The state of play for AI in business right now

Andrew Chatfield, Founder / Principal Consultant A.I. Sherpa

“Some things are not obvious to everyone” Andrew Chatfield. “Value destruction comes with huge opportunity.” How to be part of this:

- Ecosystem (locate yourself): are you (1) a tool maker - builders (5% of room), (2) a productiser - take tech and combine and shape it for others to use (15%), or (3) a market shaper - help and guide how the industry will develop, including investors, governments and international bodies (including regulation), academia, media and consumer groups (10%)? Note: the remaining % are users and the silent majority.

UNICORN - the “killer app” 👉 Caution if your breakthrough is based on tech in flux. OpenAI may not carry it forward. “Like winning the lottery, not something you plan to do but something that happens to you.”

👉 Investment community is excited - if you plan to launch, the timing is good.

SUPERCHARGERS - Existing businesses. 300% more of these than Unicorns. Existing products, made better.

SLASHERS - Using AI to reduce costs. 👉 Large wave of disruption - putting a boat on this rising tide. The timescale is set by human adaptability.

Technology might jump forward in 5-10 years, but behavioural change happens over decades.

Product companies - be brave. The big guys are very busy and find it hard to take on new ideas and thinking, even the obvious AI features. They have KPIs.

Huge market and so many opportunities across all markets including small geographies

“Get involved, be brave” andrew@aisherpa.com.au

Business and Strategy – a conversation

Mathew Patterson (Help Scout), Matthew Newman (Techinnocens), Andrew Chatfield (A.I. Sherpa), Scott Burns (ABC), moderated by Mark Monfort.

“Blockchain and AI have a lot to do with each other, as we will discover” Mark Monfort, NotCentralised. “Hype is high so we focus on responsible use and safety” Matthew Newman, Techinnocens

Hot tips: Mathew Patterson (Help Scout) “Huge opportunity for AI to translate poorly phrased questions. Conversational interfaces will help many companies. Understanding and managing incoming support needs (categorising, tagging) - currently manual tagging - AI in this space - better customer experiences!” Other uses: sentiment analysis (prioritising angry people up or down) and answering the question. Use case 1: better search over a body of work in a more natural way (conversation). Also: sentiment analysis; taking bullet points and expanding/describing more, and vice versa.

Matthew Newman (Techinnocens) Navigating the risk space (foundation for success): Using third party “magic solutions”. Differentiate with better products. Fast moving space: need to marry need / business with technology so it is not tech looking for a problem.

One side: Who you are and opportunities: understand competitors, market, appetite internally, going to market.

Opposite side: Risk assessment (type of service delivered, market delivered to, social contract).

Top: Tech forms the ceiling - the less mysterious the better (engage data scientists with business leaders).

Floor: How able are you to execute on this? Capability, translation to business need, and if using own data (is it usable?) and how it deals with the social contract.

Don’t be led by solutions leading the problem.

Scott Burns (ABC Machine learning): Addressing the challenges of integrating tech. Tech complex and complexity of integration! Pace of change, adoption pace (set by humans not machines) - different teams, people, financial structures, players, complexity of organisational change. No one core group. “Must be collaborative!” Reputational risks - with trusted brand

Because it’s collaborative, it will take time.

Example: ABC brand trust means changes will have to be communicated in a very clear way.

“Put people first. Consider the people this is affecting and the anxiety around change. Take it slow and easy, with empathy, for better adoption.” Scott Burns, Machine Learning, ABC

If it’s not introduced well, it will sit on the shelves and not get used (even though it’s a much better solution).

Andrew Chatfield, AI Sherpa “Your first product is going to be sh##” Thinking you can get to a chatbot in one jump is a fallacy. Change happens at human scale. Focus on going on the journey with your stakeholders. Your own experience and company IP are very valuable and provide context for the development.

(^side note: this is the answer to the question I’ve been asking as to why is everyone doing Chatbots…)

Technology (the end product) will give you better ways of doing things, but the real value is in the journey building with it.

TIPS

✔ Money and time: expensive to develop.

✔ Backend systems are a barrier to scale - tools are only as good as your ability to scale (need infrastructure) - risk it will become a black box.

✔ AI is very prone to black-boxing, so think about observability to build trust from the get-go. Build the infra to support it.

✔ Have a hybrid team - need good tool makers to think of the long term view of the infrastructure needed.

✔ Need to have a “grown up conversation” with the developer to avoid being sold ‘snake oil’ - otherwise decisions would be taken for you by others. Ask what decisions are being made on your behalf (e.g. how product works and how interests).

“Idea: ‘Snake or GPT’ - build a bot that gives you bad advice” Mark Monfort

Beware of commodity trap. Eg. MS O365 providing AI search over your docs, but how will they charge when everyone is also providing that? Commodity trap.

Commodity trap = Everyone will need to have AI search, but no one will be able to charge for it. “Table stakes”

Long-term downward trend: higher value services at a cheaper rate.

How to choose the right horse: Snake oil is real - AI is not new. Check that the product is up to date and that the company is not just riding the hype cycle. Evaluating your AI product build: how do you know that you’re building it right? Engage deep domain knowledge, on both the business side and the ML side. Humans are a limiting factor - need to know your pain points and avoid companies who can do everything for everybody; the ability to help implement is as important as the technology itself. Look for specifics - what is the problem that will be solved?

"Programming for HTTP was extremely accessible. Similar experience with ChatGPT - ability to try before buying the production version. Take advantage of the accessibility of this technology"(Andrew Chatfield, AI Sherpa)

(Programming on notepad.. heh. It was accessible but it sure was a lot of pain fixing your spelling mistakes, no spellcheck or linting back then)

One possible side effect: human interactions becoming more valuable. How do we add human interaction in the way that it adds value, once rote work is eliminated?

“Create an ethics board and involve all stakeholders while breaking down silos. We need a change of thinking and understanding the goals of the organisation. Open the box to see what can be achieved through experimentation” (Matthew Newman)

“Decision making requires alignment of shared values - and understanding how this impacts the organisation (e.g. costs, people, customers). Decisions need to be values based!” ABC journalists keeping true to the mission. Scott Burns, ABC

SLASHERS - Do not want to fire anyone! Important to understand cost saving from different angles: supercharging what we do well; engagement and wellbeing of staff by removing menial tasks; growing without hiring so many people. “Buy back more time”

The technology landscape

Kazjon Grace, Lecturer in Computational Design University of Sydney

“This conference offers mental models as a solution for dealing with the wall of noise.”

Investigating: Interaction of AI and interactive systems. What is Generative AI and where did it come from?

- Data points and relationships - Predictive analytics to assist decision making

- Example 1: Predictive analytics using ice cream vendor example.

- Machine learning “Can you design for me an ice-cream flavour that has a machine learning theme to it?”

- AI is getting better at making stuff (Association for Computational Creativity Research Community - https://computationalcreativity.net/)

Example 2: handwriting generation. Augmenting humans - Graves 2013 “Generating Sequences with Recurrent Neural Networks”. At or near human performance - https://arxiv.org/abs/1308.0850

Documents –> Black Box –> News or not? Predictive problem and categorisation.

Documents –> Black Box –> Titles? Information largely in the inputs. Domain knowledge comes from somewhere else (e.g. news articles).

Documents –> Black Box –> The last few lines? Need expertise but still most information in the input (e.g. strong emotive statements that are more than just summarising what was there before). Complicated to do well.

Titles –> Black Box –> The whole document? Invent content. Hallucination.

Pure classification … adding a bit … adding a lot … Ratio: stuff available to stuff not available.

2 critical changes: 1. Getting the right answer (correctness) vs getting a plausible one (give up on perfection and “right”). 2. The ratio of information in the inputs vs outputs (how much information comes from the black box rather than the input).

✨ Key message: Generative models are still “just” predicting the answer.

Not just opposites: am I analysing, or am I synthesising? Three examples along the spectrum: - generating the title of an article - generating the last paragraph - generating the whole paragraph. How much info has to come from the black box, how much from outside?

Autoencoders - same input as output (Variational Autoencoders, VAEs). Box: Restrictive, needs to learn patterns. Reproducing the input. Can throw away the input and generate more stuff (trained to reproduce).

Like Autoencoders, but with 2 models. Generative Adversarial Networks (GANs) - Arms race between forger and detective. Box: Starts from random noise. Does it well by using 2 models. 1st the forger. 2nd is the discriminator (contrasting images)

Diffusion models: turn noise into images step by step (elephants with 4 trunks). Box: Noise –> Mostly noise –> better image (more CPU cycles and advise which step you are up to)

Transformers (LLMs): Text has order (temporal structure). Images don’t have an order. Box: Work by modelling attention. Prompt –> Black box –> output (loving headings). Challenges: Different languages order things differently.

Word order differs between languages. This poses a problem for one-at-a-time word translations.

<Interaction design patterns. Watch this space! Another conference>

“The key is that most of your customers have a simple model either using tool or talking to person. Lead customer along the path that they already understand.”

Quote: “AI needs to meet somewhere in the middle of maximally unobtrusive tool versus collaborator” Kaz (USyd) (The need of new interaction design)

The ins and outs of LLMs

Paul Conyngham, Co-founder Core Intelligence

What are LLMs (GPT) and how do they work?

- Machine learning model (math function): Input –> Model (transformed) –> Output (e.g. action)

- GPT Language modelling technique simply predicts the next word (predicting the next most likely)

- By taking the sequence of the sentence

Steps on how to build a (chat) GPT? The Internet + Training (taking sequences to predict next word) INPUT (The cat sat on the) MODEL (Internet + Training) OUTPUT (completed sentence)
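To make "predict the next word" concrete, here is a minimal sketch using a bigram counter. It is nowhere near how GPT is actually trained (GPT uses a neural network over long sequences), and the three-sentence corpus is an assumed stand-in for "The Internet", but the input/model/output shape is the same.

```python
from collections import Counter, defaultdict

# Assumed toy corpus standing in for "The Internet" (not real training data).
CORPUS = [
    "the cat sat on the mat",
    "a dog sat on the mat",
    "a bird sat on the mat",
]

def train_bigram_model(sentences):
    """Count, for every word, which words follow it and how often."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following

def predict_next_word(model, prompt):
    """Return the most frequent follower of the prompt's last word, or None."""
    last_word = prompt.split()[-1]
    candidates = model.get(last_word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

model = train_bigram_model(CORPUS)
print(predict_next_word(model, "the cat sat on the"))  # prints "mat"
```

A real language model conditions on the whole sequence rather than only the last word, which is why it can complete far less predictable sentences.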

OpenAI Playground - circa 2020

Instruct GPT - Aligning language models to follow instructions: https://openai.com/research/instruction-following https://arxiv.org/pdf/2203.02155.pdf

How to build GPT apps

Context window: the maximum “working memory” of an LLM (equivalent to “short term memory”, +- 30 seconds). Input and output are both included in the count. GPT3 (4K), GPT4+ (32K) … Claude2 (100K). Web Directions - Conffab: https://conffab.com/
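Because input and output both count against the window, it helps to budget before sending a prompt. The sketch below is an illustration only: the "about 4 characters per token" estimate is a rough rule of thumb for English text, not a real tokenizer, and the function names are hypothetical.

```python
def estimate_tokens(text):
    """Very rough estimate: assume about 4 characters per token (an approximation)."""
    return max(1, len(text) // 4)

def fits_context_window(prompt, max_output_tokens, context_window):
    """Input tokens plus the reserved output tokens must fit in the window."""
    return estimate_tokens(prompt) + max_output_tokens <= context_window

long_prompt = "x" * 16000  # roughly 4000 tokens under the assumption above

print(fits_context_window(long_prompt, 200, 4096))   # 4K window: False
print(fits_context_window(long_prompt, 200, 32768))  # 32K window: True
```

For real use, count tokens with the tokenizer that belongs to the model you are calling, since the ratio varies by language and content.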

System prompt: Question and answer data sets –> GPT is good at following instructions. All GPT models have a “hidden instruction” called the “system prompt”, e.g. “Imagine you are …” or “Speak like a pirate”.
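As a rough illustration, chat-style APIs commonly take a list of role-tagged messages, with the system prompt first. The helper below only builds that list and does not call any service; `build_messages` is a hypothetical name and the exact field names depend on the provider you use.

```python
def build_messages(system_prompt, user_question):
    """Put the 'hidden instruction' first, followed by what the user typed."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_question},
    ]

messages = build_messages(
    "Speak like a pirate.",
    "What is a context window?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The end user normally sees only their own question and the answer; the system message is what steers the tone and behaviour.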

Vector databases: the equivalent of long term memory in an LLM based system. Beyond the scope of this talk, but essentially data represented as numbers -> helps solve hallucination. It’s as if you assign coordinates to semantics; that way you can get the model to generate in the context space that you want. More accurate (and cheaper) than nudging it with 100000 tokens every time.

RAG TECHNIQUE: Vector DB <-> System prompt: You are an expert at answering questions. Please answer …

Data (Files) –> Encode –> Convert into vectors (Weaviate) –> Query DB
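The flow above (encode, store as vectors, query, then prompt) can be sketched without any external service. Everything here is an assumption for illustration: the word-count "embedding" stands in for a learned embedding model, a Python list stands in for a vector database such as Weaviate, and the instruction after the first sentence of the system prompt is made up.

```python
import math
from collections import Counter

# Assumed example documents; a real system would load your own files.
DOCUMENTS = [
    "Refunds are processed within five business days.",
    "Our office is open Monday to Friday.",
    "Passwords can be reset from the account settings page.",
]

def embed(text):
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().replace(".", " ").replace("?", " ").split())

def cosine_similarity(a, b):
    """Similarity of two sparse count vectors, from 0.0 (unrelated) to 1.0."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_rag_prompt(question, documents):
    """Place the retrieved text in the prompt so the model answers from it."""
    context = "\n".join(retrieve(question, documents))
    return (
        "You are an expert at answering questions. "
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

print(build_rag_prompt("How are refunds processed?", DOCUMENTS))
```

Only the most relevant snippet goes to the model, which is the cost and accuracy advantage over pasting every document into the context window.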

- Fine tuning Create your own set of training data, questions and answers, and feed it to LLMs to train it to your needs. Very bespoke. (It’s also very labour intensive fyi)

Quote: “This tech is paradigm shifting. If you have an IF-then process you will traditionally have a human at the end for the noisy evaluation step. Machines can now do the evaluation step and deal with the fuzziness.”

Agents and Auto-GPT

Mark Pesce, Broadcaster and Futurist

Autonomous agents are not new (1987 example)

Generative Agents: Smallville example, where 25 agents are given roles and told to throw a Valentine’s Day party.

8 invitations were sent. 3 declined because they have something else going on. 1 agent offered to decorate the cafe. Things emerged organically.

Example 2: Web Directions AI agents. Demo of Observe-Reason-Act chains.

AutoGPT Example 1: “Open the pod bay doors” Break it down into a set of actions.

- Search for pod bay door opener docs

- search for comms API

- write Python module & unit tests for API
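A minimal sketch of that break-it-down-then-act loop follows. It is not how AutoGPT is implemented: `plan` and `act` are hypothetical stand-ins (a real agent would ask an LLM for the steps and call real tools), and the steps are simply the ones listed above.

```python
def plan(goal):
    """Hypothetical planner: a real agent would ask an LLM to break the goal down."""
    return [
        "Search for pod bay door opener docs",
        "Search for comms API",
        "Write Python module & unit tests for API",
    ]

def act(step):
    """Hypothetical tool call: here we only pretend the step succeeded."""
    return f"done: {step}"

def run_agent(goal):
    """Work through the plan one step at a time, recording what was observed."""
    observations = []
    for step in plan(goal):          # reason: choose the next action
        result = act(step)           # act: carry it out
        observations.append(result)  # observe: keep the result for later steps
    return observations

for line in run_agent("Open the pod bay doors"):
    print(line)
```

In a fuller agent the observations would be fed back into the planner so that later steps can change based on earlier results.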

Example 2: generating manipulation of 2024 US Presidential election. Release the kraken!

“It works now. Not perfect but good enough. We can go back to those dreams and make them real. Like the 1966 Star Trek talking to the machine and Alan Kay’s early visions” https://www.ted.com/talks/alan_kay_a_powerful_idea_about_ideas/transcript

Machine Vision

Arafat Tehsin, Senior Manager EY

The state of machine learning and how we can incorporate it. Maximise your business outcomes with next-gen computer vision

Need multi-modal approaches - need tech to recognise object, understand scene, understand emotion and situation

Large foundation models (World of Multimodality) - Space, Time - trained on open world recognition (Recognised object categories)

Project Florence: foundation model for Machine Vision. Trained on open world recognition. More than one capture in that image, so not just recognising type (human, cats etc) but also where it might have been sourced from.

Case study 1: How to manage digital assets. Search optimisation - image retrieval, automatic dense captions, background removal (suggested descriptions for pictures in Reddit are an example) –> Image Analysis API (publicly available)

Case study 2: Enhance safety and security. Infused by vector search tools: video search, video summarisation (can search using natural text - significant time saver). Can also generate a summary of the video.

Case study 3: Automate Retail Operations - https://azure.microsoft.com/en-us/blog/build-next-generation-ai-powered-applications-on-microsoft-azure/ Model customisation and product recognition (can fine tune) + add own custom images. See: Azure AI Vision Studio –> Summarising the video. Run a test: Summarize video, Locate specific frames using search query: Add search query [This cannot be done by Bard just yet]

Quote: Azure - get access as an alliance partner; universities can access free of cost. “You can go some distance but not too far with no-code.”

Case studies

- Thierry Wendling–LLMs in education “What it takes to take it to prod”

- started with zero-shot. No good, not as easy as classification tasks (this has a spectrum of correctness)

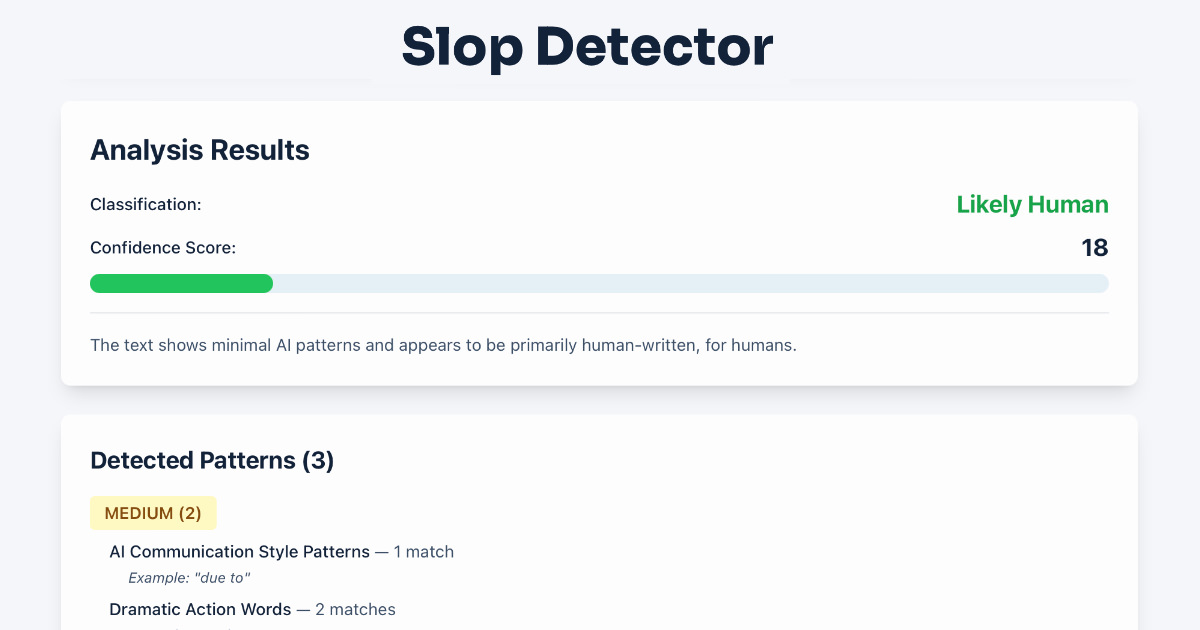

- GPT-4: does pretty well with few shots, some reasoning, but not fine-tuneable and so capped by rate limit. …see image of Comparison of LLMs for full stats Microsoft paper: Want to reduce labeling cost? GPT-3 can help. For fine-tuning. Few hundred examples with high quality training models.

- Anna Dixon–ABC’s AI experiments Case study: localised weather bulletin. Erika, a local radio broadcaster, has to juggle lining up the program and fitting in local weather in-between. The listener, David, has a problem with having to listen through things in-between before getting to what he cares about: the local weather update (instead of the whole of QLD). Experimental solution: the flow of data

The Script: Weather forecast –> LLM (GPT) –> SCRIPT OUTPUT –> Audio file (synthetic voice) –> Dynamic insertion (internal system) (to make geo specific) –> insert bulletin into correct program

Quote: “First thing for experiments is to be laser focused on what you want to learn”

Things to learn: What prompt, Accuracy, Cost (esp when scaling), data management, audience response. Involve stakeholders as “part of the show”. Anna Dixon - Stay tuned for Anna’s presentation at the Summit

Quote: “GPT unlocked many things. Everyone now wanted to work with us! The easy to use chat bot was a way in with decision makers” Anna “Appetite changed overnight for these experiences” Anna

- Ivy Hornibrook–Canva’s new AI ‘magic design’

💭 “Every knowledge worker asks themselves how vulnerable am I to this world of AI” Ivy Hornibrook. Use case: Templates are generic –> transition with AI to making them personalised

💭 “AI is new. User problems aren’t” Canva 💭 “Interaction mode of prompting can fight against decades of human conditioning. There is no one shot attempt at inspiration. Inspiration is a conversation with stimulus” 💭 “New ways of creating value. Iterative nature of human creativity shouldn’t be shortcut but facilitated”

Fundamental nature of creativity doesn’t change.✔

Good design is a good solution within a well-constrained problem.

In the AI space, your problem changes in real time.

Issue: Consider the impacts on the user. Issue: Users struggle with prompting. 💭 “What to design and what to teach has rapidly changed. Design for the future rather than today’s constraints.”

❤ Rather than design for constraints, design for the future.

(How?)

Spike, measure, learn. (Short iterations) Build, learn, spike, learn Measure, spike, learn Release, measure learn

Always consider user motivations. 💭 We don’t have the intuition yet.

“The future is already here, it’s just not evenly distributed” William Gibson

“Even a month is too long a bet without de-risking it” Ivy (Canva) Everything is new again. Standing on the precipice, peering in the moment of AI eating the world.

- Mihail Dungarov–Applied LLMs in Finance

Finance is a highly regulated industry - London Stock Exchange Group (was part of Reuters). Research, news, customers in trading, investment, wealth, risk and academia. “1 Day = 5Gs trading data”

Problem question: How can we help people deal better with information overload? To enable focus and identify the important/relevant information to be digested.

Topic Sentiment Analysis - SentiMine www.lseg.com/en/labs/sentimine

TSA feeds into –> Workspace Apps: Signal Search (screenshot from LSEG Workspace). Colour code: Green (positive), Red (negative) - prioritise topics by popularity, extract statements relevant to particular topics and themes. Separate out by who is speaking in the conversation.

LSEG Workspace Apps: Search Query Expansion. Use case: Expand customer queries such as WACC (Weighted Average Cost of Capital) with relevant synonyms (such as capitalization rate).
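A minimal sketch of the query-expansion idea, assuming a hand-written synonym table. In the talk the related terms come from LLMs; the `SYNONYMS` dictionary and `expand_query` function here are hypothetical and only show the shape of the step.

```python
# Assumed synonym table; in practice an LLM or curated glossary would supply it.
SYNONYMS = {
    "wacc": ["weighted average cost of capital", "capitalization rate"],
}

def expand_query(query, synonyms=SYNONYMS):
    """Return the original query followed by known synonyms of each term."""
    terms = [query]
    for term in query.lower().split():
        terms.extend(synonyms.get(term, []))
    return terms

print(expand_query("WACC"))
```

Each expanded term can then be searched alongside the original, so documents that never use the acronym are still found.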

Powered by LLMs - some version of training, fine tuning, choice depends on usecase. “The more you explain the topic as you would to a new graduate the better result through GPT” Mihail

(Not the speaker’s note: in the short term, the market is a voting machine… maybe now we can watch the vote in real time)

A prompt engineering deep dive

Tanya Dixit, Pouya Omran, ML Engineer, Senior Data Scientist, Crayon

“There is no golden prompt: it depends on the LLM and specifically the problem you are trying to solve” Tanya Dixit

Usecase: Call centre analytics (e.g. call summarization, sentiment analysis, compliance detection, probability of sale analysis, opinion mining (deeper than sentiment analysis))

💭“Even if transcript is not accurate can still do much with LLM (semantics)”

Different sets of NLP Tasks/problems mapped to LLMs 1/ Direct Prompt Approach Harness the power of LLM with Azure Machine Learning prompt flow - MS Community Hub https://techcommunity.microsoft.com/t5/ai-machine-learning-blog/harness-the-power-of-large-language-models-with-azure-machine/ba-p/3828459 Use LLMs to post process to execute directly

Direct Prompt approach: suits unsupervised and few/zero-shots classification tasks.

Prompting is not just one LLM call. LLM Stacking and chain-of-thought patterns.

Embedding approach: transforms text into a vector reflecting relations between texts in a multi-dimensional space. We can reuse this in other ML techniques like random forest etc.

2/ Embedding-based approach (good way to cut costs and reduce latency). Embeddings reflect semantic nuances. Translate to vector. Unsupervised tasks, supervised and hybrid tasks

LLM comparisons on factors that matter!

| LLM | Cost | Num tokens | Num params | Cloud | Open-source |
| --- | --- | --- | --- | --- | --- |
| J2-Summarize | Phr | In: 50,000 char | 178B | AWS JumpStart | |

TIP: The number of tokens affects output quality after a point. Break the input into small parts to reduce this risk.
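One simple way to break a long transcript into small parts is to split on word count, as sketched below. Word count is only a proxy for tokens, and `split_into_chunks` is a hypothetical helper; splitting on sentence or speaker boundaries would usually preserve more meaning.

```python
def split_into_chunks(text, max_words):
    """Split text into consecutive pieces of at most max_words words each."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]

transcript = "agent hello caller hi I would like to check my order status please"
for chunk in split_into_chunks(transcript, 5):
    print(chunk)
```

Each chunk can be summarised separately and the partial summaries combined in a final call.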

Concept: Attention mechanisms

On intuitive level: Prompt serves as a query along with some context to an LLM Note: works best on an instruction-tuned LLM and depends highly on how a model is trained

Using Davinci (does more than chat based tasks) Parameters matter: e.g. temperature (decides the creativity of the output) Temp 0 provides most stable output Prompt: Summarize the text: [Transcription]
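To build intuition for why temperature 0 gives the most stable output, here is a sketch of temperature-scaled softmax over made-up next-token scores. The scores are assumptions for illustration, and treating temperature 0 as "always pick the top token" is a common convention rather than something stated in the talk.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("use pick_greedy for temperature 0")
    scaled = [score / temperature for score in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - highest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

def pick_greedy(logits):
    """Temperature 0 behaviour: always return the index of the top score."""
    return max(range(len(logits)), key=lambda i: logits[i])

scores = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for temperature in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(scores, temperature)
    print(temperature, [round(p, 3) for p in probs])
print("greedy choice:", pick_greedy(scores))
```

At low temperature almost all probability sits on the top token, so repeated runs agree; at high temperature the choices are close to uniform, which reads as more "creative" and less stable.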

gpt-3.5-turbo: Use for chat based tasks. Need to change the prompt to finish within 100 tokens. Improve by: reducing temperature to 0, changing max length to 200 (worse outcome)

❤ “Even colons make a big difference!”

TIP: Make prompt clear regarding instruction, input and output required “Summarise the following transcript in one paragraph”

The Davinci003 model was more accurate than GPT3.5 Turbo, but with effort the accuracy of GPT3.5 Turbo improved.

Usecase: Ask your data Interact with your data using natural language 1/ Direct or few-shot prompting (Retrieve-then-read) 2/ Chain of thought prompting (Chain of instructions, reasoning and sometimes actions) –> breaking down a big problem to smaller ones (1 query broken down to smaller ones)

Each LLM has a prompt guide available. Look at characteristics (e.g. training data, context length). How to evaluate? Validity (degree of hallucination), format, stability (same on multiple runs), safety (working with many users).
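Of these criteria, stability is the easiest to measure mechanically: run the same prompt several times and see how often the outputs agree. The sketch below assumes you have already collected the outputs as strings; the `stability` function and the agreement-rate definition are illustrative choices, not a metric from the talk.

```python
from collections import Counter

def stability(outputs):
    """Fraction of runs that match the most common output (1.0 = fully stable)."""
    if not outputs:
        raise ValueError("need at least one output")
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)

# Assumed outputs from four runs of the same sentiment prompt.
runs = ["positive", "positive", "negative", "positive"]
print(stability(runs))  # prints 0.75
```

Exact string matching suits classification-style outputs; for free-text summaries a similarity measure between runs would be a better fit.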

LilianWeng: https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/ Project: https://80000hours.org/ (building own vector database to help school leavers)

Embedding (in a high-multi-dimension/vector space) reflects semantics, like how words are related to each other and understood within context.

Check out the Latent Space podcast to help develop your intuition. https://www.latent.space/podcast

Closing conversation: Risks and challenges

Michael Kollo, Paul Conyngham, Bec Johnson, and Raymond Sun

Ajit Pillai (USyd) Bec’s challenge: “Values embedded and reflected in training data and prompts - how to account for ethical value pluralism”

“We know the systems are biased, partly because of training data, also other aspects (e.g. biases and world RLHF). Inherently human systems and reflective of us. How do we make sure that the systems are reflective of the values you want to espouse?”

Michael: “Not really sure of outcomes until out in the wild. Can’t fully understand the effects until released. Hard to pull effects back - on a ride and catching speed”

Ray: (a) tech lawyer HSF - Advising on regulatory issues, review and write contracts (b) developer - dance tech space (c) create content on social media to educate on AI regulation. Website to track AI regulation around the world: https://www.techieray.com/GlobalAIRegulationTracker.html “How do you regulate AI and balance between innovation and safety” The most difficult thing is there is no one right answer. Depends on the regulations and customs of the country.

Paul: “With agent generative technologies it will be hard to tell what is real and what is fake. Are you human or a bot?”

Human rights - Use a vector database to address human rights safety. Need a pipeline: Issue –> Solution. What does your application do? Risks? Then solutions (tech, people and process). Need people to follow policies and procedures. Documentation does not do anything on its own; it must be followed (training).

Not anticipatory model, because we’re pushing boundary

How can we create an anticipatory AI?

Protective - automating prediction, need to "keep doors open to humans". Close the loop by auditing the impact of these systems.

(Side note: slippery slope to predicting the likelihood of, for example, someone committing a crime and using the prediction as if it's already happened, like in some sci fi movies. Presumption of guilt.)

Consider: Bias / Perception of bias; causation

“Don’t undervalue commonsense: What can go wrong? Simple at first but can go a long way.” Raymond

“Bring in stakeholders - keep the door open to diverse sets of people impacted by the technology (diverse experiences/perspectives)” Bec Johnson

How to ensure transparency when incorporated in organisations?

Explanation and transparency need to be disclosed upfront and embedded in the process. AI Legislation (UK) - “AI system provider”

Mechanistic interpretability - explaining black box models Paul Conyngham Can add human tests at the end, but there are always things that are not considered.

“Giving a mathematical explanation is not sufficient for addressing the problem. Concepts of FAIRNESS are important and very human (implicit decisions). Reframe to ‘why not me’ explanations” Michael Kollo

Concept: Meaningful explanation

Bec Johnson: “Australia can be very active in this space to ensure fine tuning to ensure values reflected”

“We are not starting from perfection but something that has a distribution. The benchmark is what I can reasonably achieve with other resources. Risk is not meeting those objectives. Not technology binary code but more human” Michael Kollo

EDUCATION Needs to start very early. “Important way to create workspace literacy is giving staff the time to ‘play’ with models.” “From a social perspective we have a poor dialogue on AI. Most people see it as a threat, difficult and uncertain. This affects adoption.” Michael Kollo “Reeducation needed on the social fear narrative.”

Future about: Critical reasoning and language modeling; Future co-pilot, need a strong thesis that this will improve human reasoning.

(University of Queensland - Citizens survey) - Risk awareness and benefits. Risk aversion

Australia ranks in the middle in terms of risk awareness, but at the bottom of recognising the potential benefits.

Workers, worrying about impact on jobs. Don’t let it be another Brexit that resulted from 10 years of painting EU in a bad light.

Strong thesis on reasoning that will help people to see AI in a better light. Social narrative - difference between adoption and burning it all down.

“We are now at the 1994 moment for AI. We need a similar education program now and to get good at GPT skills - learning, creativity, critical reasoning programs.” Paul C.

Critical reasoning.

Who is liable/responsible when this goes wrong? Depends on the claim. Different claims different responsibilities: EU Liability directive - Deploying entity is presumed responsible for the system regardless whether they built it. (Regulation, contract layer) Rest of the world does not have a clear position on this.

Autonomy question - informed decision

Risks of putting sensitive data in ChatGPT (confidentiality)

- Sensitive information stored and potentially reused.

- Breaches privacy, data protection, privilege (= disclosure and therefore breach)

- Casestudy: Samsung employees https://www.theverge.com/2023/5/2/23707796/samsung-ban-chatgpt-generative-ai-bing-bard-employees-security-concerns

- Disclosure prevents patent protection

- Message: can use chat but redact sensitive information
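A minimal sketch of redacting before pasting text into a chat tool, assuming only email addresses and one phone number layout need masking. The two patterns are illustrative and will miss plenty (names, addresses, account numbers, other phone formats), so treat this as a starting point rather than a safeguard.

```python
import re

# Illustrative patterns only; real redaction needs a much broader rule set.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{4}[ -]?\d{3}[ -]?\d{3}\b")

def redact(text):
    """Replace email addresses and 10-digit phone numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text

print(redact("Contact jane@example.com or 0412 345 678"))
# prints "Contact [EMAIL] or [PHONE]"
```

Running the redaction locally, before the text leaves your machine, is the point: once sensitive text is submitted it may be stored.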

Options available to deploy the technology (Paul Conyngham): opt out (following a maze of links in links); own cloud instance (Azure cloud); train your own models.

Intellectual Property: OpenAI lawsuits on taking information and copyright material. “ChatGPT - Democratised cheating. Useful for scratch pads. Augments Grammarly, definitely needs to be checked. We need to have a bit of a paradigm shift - getting over individualist learning to more collaborative and there is scope to do that with new tech.” Bec Johnson

❤️"Teach people that this is a creative tool not a search tool” Bec Johnson

“Last generation of students being taught by humans.” Michael Kollo “Text is atomised and then reconstituted. Until we have a really fine toothed comb”

Tech solutions: Pixel blocking, Tagging data (Meta) –> still underground so watch this space.

The AI Startup Landscape

Annie Liao, Investor Aura Ventures

VC take on the GAI landscape. Market map - each layer has different considerations and competitive advantages. What does this look like for Australia? Open source mapping exercise - trends: Not too many in the LLM area (expected, as it is capital intensive). Most in industry verticals and business units (Apps) - entry barriers very low (Leonardo AI - GAI for gaming assets), Data as a service, Mining (Heavie)

Where people are building? 50% of start ups in Sales/marketing (less black and white), Healthtech (industry where data is historically fragmented), HR and Fintech

The barriers to entry have never been lower for the application layer.

Picking winners 1/ Is there a real pain point? 2/ Founder has unique insight or domain expertise? 3/ How long to unlock defensibility (data moats + business context)? or just a wrapper around gpt? reinforcement learning over time?

Future predictions –> Latent space AgentOps (intelligence, memory, tools and plugins: the basics of what makes an agent) –> communication between agents (vector space? agents marketplace? nexus AI)

AI Co-pilots –> Autonomous AI Agents –> AI Agent fleets

The Big AI Mixer

Web Directions AI: AI Startup Showcase

Nathanael: contentable.ai Path to purchase relies on product information. Problem: 30% purchasers are dropped, also undiscovered products. Solution: End-user to enrich

Mathew: Sahha (Health company) Solution: Apps on mobile device related to health provide insights but this offers follow up and patterns (using predictive modeling to determine how effective intervention)

David Turner: Lext Problem: 85% huge access to justice. Launched yesterday: App.lext.au Solution: reliable, consistent summarising and reviewing of documents

Meead Saberi: Footpath.ai Device: UNSW spinout Pitch line: Used google map good for cars but walking underrepresented. Collect imagery data. Capture and train - benches, bike racks etc. A different type of map - not just for cars but humans.

Sean Marshall: Platformity Improve local government asset management. Help people with visual disability. Buses used to collate the necessary information. Real time workflow, every tree, bench etc. Improve the citizen experience.

Janhvi Sirohi: Outread Context: Research papers locked behind paywalls. Rank papers by metrics and make accessible

Jeroen Vendrig: ProofTech Assets - Cars, insurance, fleet management (capturing damage) Problem: Determining whether and how to address repairs (speed of workflows, decision making, what needs to be done to rectify damage) “Actionable intelligence” Deck finished for pre-series A

Christophe Garrec: Choosely Problem: Helping with group decisions (e.g. buying a group present) “Variety is the spice of life. Choosely is all the way through the decision process” From information, decision to purchase

Kunal Vakadara (Haast) B2B brand and regulatory compliance obligations Working with superfund ASIC University to monitor university partners Telco - consumer law LLM allowed companies to upload, regulatory compliance Compliance coach Closed Beta trial - regulatory compliance

Nilu Kulasingham: stori.gg Collaborative game development Building a github for law/lore(?) Funded: AWS AI accelerator

Paul Conyngham: Chris Problem: Accountant charging for the error as well as to fix it

Chris Anthony: Skillojo (Launched 7 July 2023) Linkedin + Tinder Problem: Being rejected for work. Many blockers between job seekers and jobs. Helps with recruitment.

Tony Mercier: Artization Problem: Buying and selling art, measuring how people respond to artworks. How: sensors on the ceiling and near artwork, can determine how long people look and how they are reacting Ask: Looking for additional developers

JP Tucker: copydash.ai Problem: Generate up to 1M product descriptions with one click. Search optimisation without paying high fees. In market - 5-6 weeks since launch

Conor Stack: Stack creative

Will McCartney: Habeas Legal research company. Effective, timely (saves time). Problem: Need to navigate very complex databases to find answers to simple questions. Mission: AI to promote critical, analytical thinking. Ask: Pilot program

Gregory Hunter: Arbiter Technology AWC Idea: Automating the security process, scalable HR Use regulation as way to determine which site needs which security Demonstrate: BHP IDEA: First responder help

Keren Flavell: vAIsual Dealing with copyright issue for training AI