OSINT Symposium Day 1

Sept 18-19 2025

https://www.osintsymposium.com

https://www.osintsymposium.com/2025-agenda

The Chatham House Rule applies

The Australian OSINT Symposium 2025 brings together open-source intelligence (OSINT) practitioners, leaders, analysts, and researchers to advance tradecraft, harness AI, and strengthen intelligence capability.

The theme, Building Enduring OSINT Capability, addresses how to sustain OSINT at the individual, team, and organizational levels in an era of rapid technological change and global complexity.

Focus Areas

* Human capability – tradecraft, leadership, resilience: Strengthening practitioner and team expertise through professional development and leadership skills.

* Sustaining capability amid technology shifts: Adapting OSINT methods to automation, AI, and evolving tools while preserving operational effectiveness.

* Impact of capability: Demonstrating how applied OSINT informs decision-making, supports security and law enforcement, and delivers public-good outcomes.

Expert-led discussions, case studies, and workshops provide practitioners with practical strategies to develop sustainable, ethical, and adaptive OSINT practices.

https://www.osintcombine.com/podcast

——

DAY 1

Principles Under Pressure

Professor Peter Greste, Macquarie University & The Alliance for Journalists’ Freedom

Drawing on his lived experience as a journalist and press freedom advocate, Peter Greste reflects on the ethical weight of working with information in today’s world. His perspective, grounded in a discipline that has long grappled with questions of public interest, transparency and integrity, offers the OSINT community a powerful lens for considering how principles-based approaches can help build trusted, enduring capability.

Core Theme

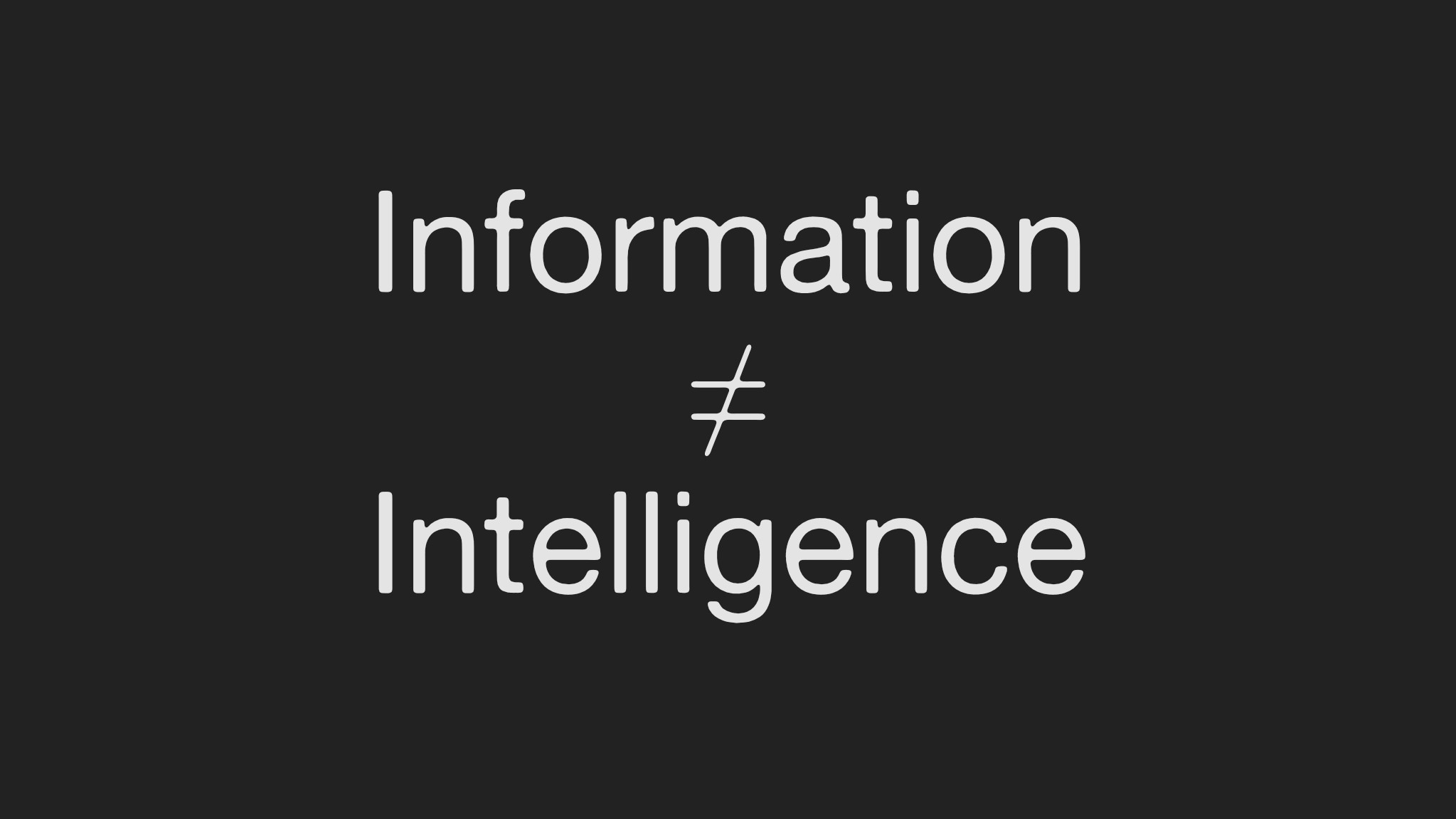

Peter Greste explores the ethical dilemmas of journalism and open-source intelligence (OSINT), emphasizing the responsibility of turning raw information into meaningful, trustworthy intelligence. He argues that information without context, verification, and ethical judgment is meaningless—and that trust is the bridge between journalism and OSINT.

Key Points from Greste’s Talk:

Purpose and Responsibility of Information

- Information by itself has no value until verified, contextualized, and ethically presented.

- Both journalists and intelligence professionals face the same dilemmas:

- What to reveal and what to conceal?

- How to balance public interest with safety and security?

- How to avoid harm while exposing truth?

Examples from Journalism

- Sudan: Publishing footage of war crimes justified transparency but risked harm. Interviewing rape victims raised ethical concerns about dignity and trauma.

- Kenya: Exposing corruption in aid distribution highlighted moral conflicts about disrupting aid supplies.

- South Sudan/Weapons trafficking: Publishing sensitive intelligence risked sources’ safety but was justified by public interest.

- Afghanistan: Choosing not to film prisoners, as their fear made consent impossible and accounts unreliable.

WikiLeaks vs. Snowden

- WikiLeaks (Assange):

- Dumped vast amounts of data without context or filtering.

- Raised awareness but lacked journalistic practices like verification and protection of innocents.

- Greste argues this was not journalism.

- Snowden’s NSA Leaks:

- The Guardian applied rigorous editorial oversight, analysis, and expert input.

- Balanced transparency with confidentiality.

- Greste considers this brilliant journalism.

Parallels with OSINT

- OSINT has revealed war crimes and informed the public (e.g., MH17, Ukraine, Afghanistan, Gaza).

- But it faces the same challenges:

- Risk of exposing tactical details that endanger lives.

- Protecting vulnerable communities from reprisals.

- Preventing misuse or disinformation.

- Principles-based craft

The Fragility of Trust

- Journalism has seen its social license eroded due to loss of public trust, bad actors, and weaponization of information.

- Without standards, OSINT risks the same collapse in legitimacy.

- Both fields must embrace professional standards, transparency, and accountability to maintain credibility.

Q&A Highlights

- Balancing Responsibility and Truth: Journalists must be transparent with sources while staying true to their responsibility to the public.

- Publishing Graphic Content: Requires balance—avoid gratuitous material but don’t sanitize reality to the point of erasing consequences.

- Personal Risk: Journalists accept dangerous assignments after careful discussions on risks and value of the story.

- Disinformation Challenges: With the flood of online information, even fact-based reporting is dismissed as propaganda. Journalists must double down on rigorous fact-checking and transparency, while acknowledging they can’t eliminate misinformation entirely.

Conclusion

Greste closes with a clear message:

Information ≠ Intelligence.

Journalism and OSINT both require verification, context, ethics, and accountability to transform raw data into trustworthy knowledge.

With great access comes great responsibility—both professions must uphold trust or risk losing legitimacy and power.

Notable quotes

On the Value of Information

- “Information is nothing. Information alone is nothing. It only becomes intelligence when it’s tested, contextualised, and made accountable.”

- “Without accuracy, fairness, balance and integrity, journalism becomes just another voice in the noise.”

On Journalism’s Responsibility

- “What information should be revealed and what should remain hidden? How to verify information to confirm its authenticity and its accuracy?”

- “With great access comes great responsibility. Responsibility to be aware of the risks of disclosure. Responsibility to apply professional standards consistently. Responsibility to maintain the trust in the work itself.”

On WikiLeaks and Snowden

- “Journalism is not simply the act of making information public. It’s a whole set of practices that include verifying the information, editing it, analyzing the context, and granting it meaning and significance.”

- “WikiLeaks did none of that. They dumped everything online. All of it.”

- “Snowden’s leaks… I think it involved brilliant journalism.”

On Trust and Legitimacy

- “Trust is fragile. Us journalists have learned that the hard way. When we lose it, so too goes our legitimacy and our purpose.”

- “Our social license for journalism has become dangerously diminished. We rank right up there on the social scale with used car salesmen and pornographers.”

On Parallels with OSINT

- “The public sees extraordinary intelligence and technical capabilities, but what they really need is confidence in the ethics and standards that guide how those capabilities are used.”

- “OSINT might not serve the public directly, but without trusting your accuracy, your motives, your judgement, your work loses its legitimacy and its power.”

——

The New OSINT Arsenal: GenAI as the Analyst’s Force Multiplier

Chris Poulter, OSINT Combine

Discover how Generative AI is transforming open-source intelligence, from accelerating deep web research to detecting disinformation. Analysts and decision-makers will gain practical tools, integration strategies and insights into emerging risks, with guidance on using custom GPTs, agent-based tools and prompt engineering in real-world workflows.

https://www.osintcombine.com/post/how-to-spot-a-fake-analysing-inauthentic-content

Chris Poulter, founder and CEO of OSINT Combine, argues that generative AI is not a passing trend but a powerful enabler that can transform OSINT (open-source intelligence) workflows. He explores how AI can save time, improve efficiency, and expand analytic capacity—while still requiring human judgment, ethical standards, and digital trust.

Key Points

Evolution of Generative AI

- AI has rapidly shifted from text-only to multimodal capabilities (text, images, video, audio).

- Analysts must understand the origins, capabilities, and limitations of different models.

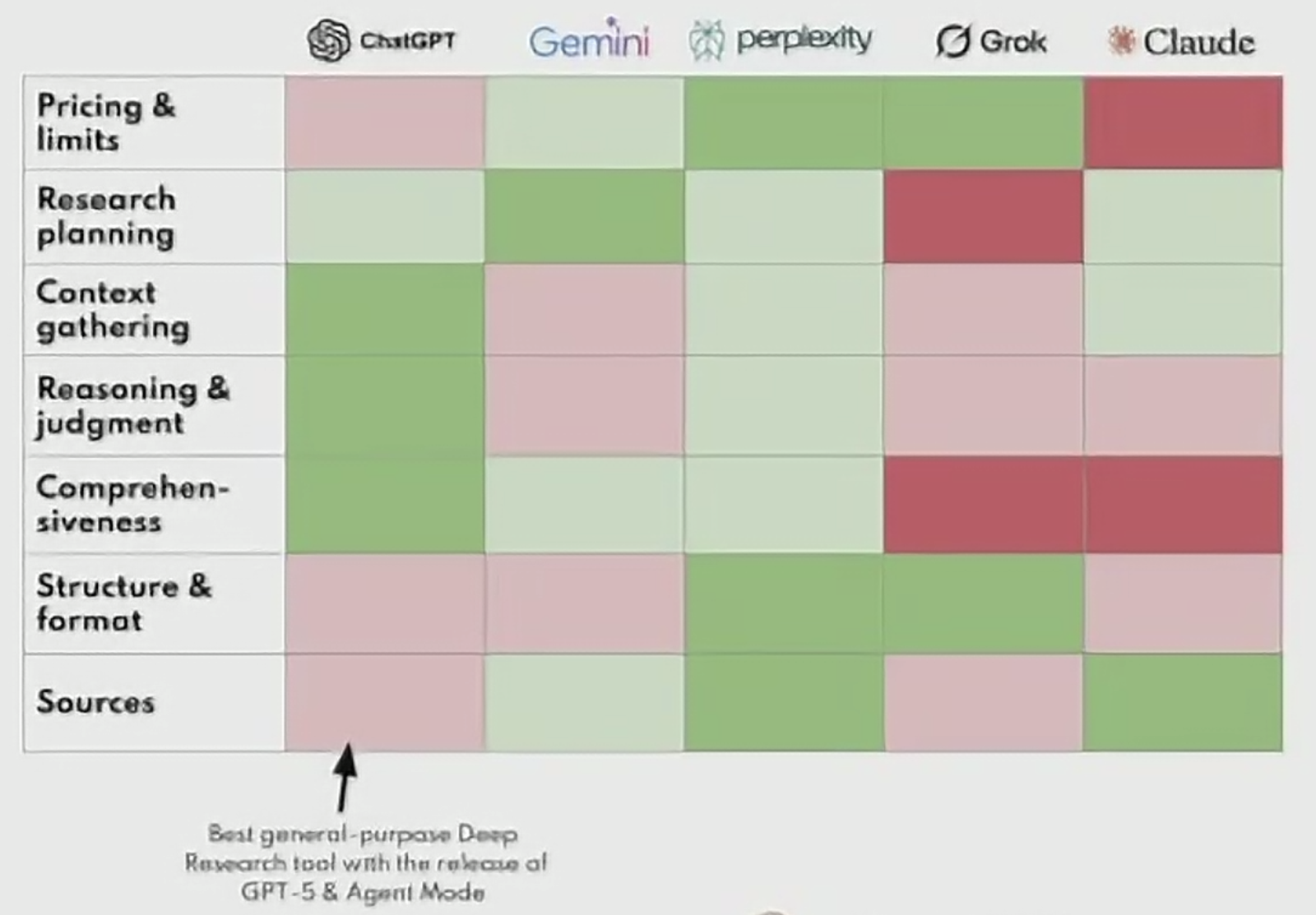

- AI literacy is crucial: not all models are equal (e.g., GPT, Claude, Gemini, Grok each excel in different tasks).

Prompting vs. Context Engineering

- Prompt engineering = asking good, structured questions.

- What are you trying to achieve? Direction → Context → Constraints

- Break into smaller parts & thinking steps: Chunking → Chain of thought → Formatting

- Context engineering = providing instructions, history, tools, and knowledge upfront to ensure consistency, accuracy, and repeatability.

- Building custom GPTs is one way to apply context engineering effectively in OSINT investigations.
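The Direction → Context → Constraints pattern and the Chunking → Chain of thought → Formatting steps can be sketched as a small prompt builder. This is a minimal illustration, not a tool shown in the talk: `PromptSpec`, `build_prompt`, and every field name are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the Direction -> Context -> Constraints pattern."""
    direction: str                                         # what you are trying to achieve
    context: str                                           # background the model needs
    constraints: list[str] = field(default_factory=list)   # limits on scope or sources
    steps: list[str] = field(default_factory=list)         # chunked sub-tasks
    output_format: str = "bullet list"                     # requested formatting


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the parts into one prompt string, always in the same order."""
    lines = [
        f"Direction: {spec.direction}",
        f"Context: {spec.context}",
    ]
    if spec.constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in spec.constraints)
    if spec.steps:
        lines.append("Work through these steps in order, showing your reasoning:")
        lines.extend(f"{i}. {s}" for i, s in enumerate(spec.steps, start=1))
    lines.append(f"Format the answer as: {spec.output_format}")
    return "\n".join(lines)


if __name__ == "__main__":
    spec = PromptSpec(
        direction="Summarize the public web presence of a named company",
        context="Open-source research only; the reader is an intelligence analyst",
        constraints=["Cite a URL for every claim", "Flag anything unverified"],
        steps=["List candidate sources", "Summarize each source", "Note gaps"],
    )
    print(build_prompt(spec))
```

Keeping the assembly order fixed is the point: the same spec always yields the same prompt, which is one small step toward the consistency and repeatability that context engineering aims for.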

AI in OSINT Workflows

- Search augmentation: AI can categorize and summarize results, saving analysts hours.

- Google Gemini, what’s happening: when you search “Name Company LinkedIn” in Gemini, it interprets your words, reformulates them into a Google/web search, and then summarizes or highlights the most relevant results instead of strictly applying Boolean rules. The results are not ranked, and hallucinations are a risk.

- Deep research modes: AI agents iteratively refine searches, self-check outputs, and improve reliability.

- Agent mode: autonomous systems that can navigate websites, bypass barriers, collect structured data, and generate reports — tasks that might take analysts hours can be done in ~20–30 minutes.

- Agent Mode is an advanced ChatGPT capability that enables the AI to autonomously handle real-world, multi-step tasks using its own secure virtual computer. What can it do?

- Navigate websites using a visual or text-based browser.

- Log into accounts securely (with your permission).

- Fill out forms, click buttons, extract data - just like you would manually.

- Execute scripts or code snippets.

- Generate structured outputs such as slide decks, spreadsheets, or presentations.

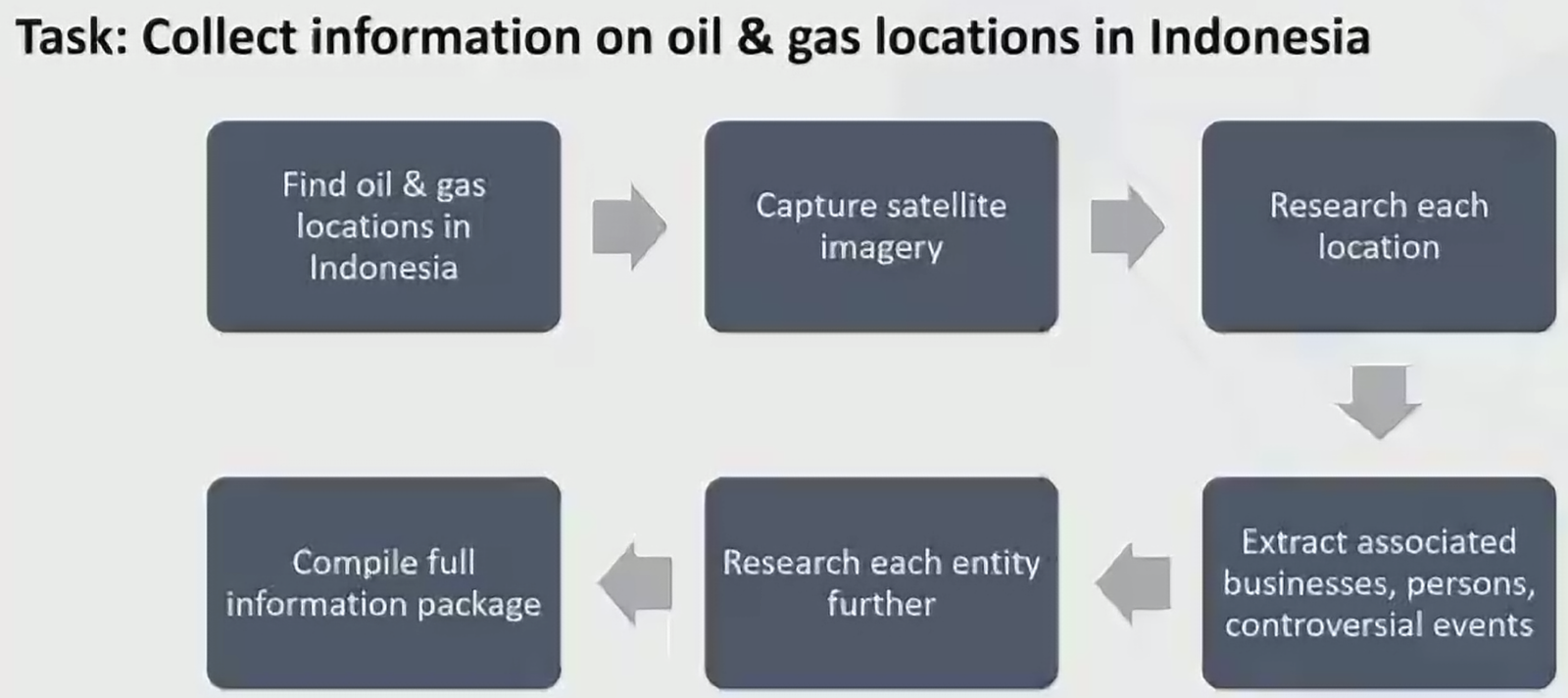

- Example: Mapping and analyzing gas facilities in Indonesia with AI agents.

- AI Agent Mode:

- Create query to find OSM data for locations

- Manually visit each site in Google/Bing Maps, take screenshot

- Research each location using web searching

- Extract people, businesses, events and compile for each site

- Research additional information on each person, business and event (rough research)

- Compile report

- Example prompt:

- Run this EXACT boolean query directly on Google.com - include the top 10 results: “chris poulter” “OSINT Combine” - Provide all the links in a list - Extract the content from each link separately - Analyze and provide a separated list of links for which ones are company websites, social media, or other auxiliary sites

- Human work was more thorough, and verification was done during process

- AI was significantly faster, captured 80% of what was asked, leaving extensive time for human to analyse the information and fill gaps

- Recommendation:

- Combine both methods

- Use AI + Human to extend research from initial agent

- Human verifying concurrently, fill gaps, extend AI further

- Why it matters?

- True force multiplier for collection & processing tasks

- It does what a human would do - clicks, collects, processes

- You can watch & control it

- An army of junior collectors

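For the human verification side of the example prompt above, the same exact-phrase query can be reproduced by hand and the returned links sorted into the three buckets the prompt asks for. A minimal sketch follows; the function names and the `SOCIAL_DOMAINS` list are illustrative assumptions, not something presented in the talk.

```python
from urllib.parse import quote_plus, urlparse

# Assumed, illustrative list of social media domains; extend it for real work.
SOCIAL_DOMAINS = {"linkedin.com", "x.com", "twitter.com", "facebook.com", "instagram.com"}


def google_exact_query_url(*phrases: str) -> str:
    """Build a Google search URL in which every phrase is quoted for exact match."""
    query = " ".join(f'"{p}"' for p in phrases)
    return "https://www.google.com/search?q=" + quote_plus(query)


def categorize_link(url: str, company_domains: set[str]) -> str:
    """Label a link as 'company', 'social', or 'other' based on its hostname."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]

    def matches(domains: set[str]) -> bool:
        # Match the domain itself or any subdomain of it.
        return any(host == d or host.endswith("." + d) for d in domains)

    if matches(company_domains):
        return "company"
    if matches(SOCIAL_DOMAINS):
        return "social"
    return "other"


if __name__ == "__main__":
    print(google_exact_query_url("chris poulter", "OSINT Combine"))
    print(categorize_link("https://www.osintcombine.com/podcast", {"osintcombine.com"}))
```

Running the agent's link list through a check like this makes it easier to see what the agent missed or mislabeled before extending the research.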

Specialized Use Cases

- Disinformation investigations: Tools like Grok provide direct access to X/Twitter data, helping cluster and identify botnets or narratives.

- “Get the top 10 accounts trending for #MAGA, analyze carefully their friends/followers and timestamps of posts, and the posts themselves, and tell me which ones are possibly part of a botnet”

- “Analyze Monika_maga47 friends and followers, including all of their friends and followers. Do a detailed social network analysis to see who in their network are possibly bots also, and provide a CSV in the format of node, friend/follower”

- “Due to limitations in directly fetching follower/following lists (X pages for these are dynamic and not fully capturable via browsing), I constructed a proxy social network based on interactions: posts by @Monika_maga47 and replies to them, as well as deeper interactions from selected suspicious repliers. This interaction graph serves as a reasonable approximation for “friends and followers,” where replies indicate engagement (follower-like behavior) and replied-to accounts indicate following/interests (friend-like).”

- Synthetic content detection: AI helps identify manipulated media, though risks like deepfakes, extortion, and AI-driven disinformation campaigns are growing.

- Browser integration: Tools like Microsoft Edge Copilot streamline monitoring and translations.
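The proxy interaction graph described in the disinformation example can be approximated offline once reply data has been collected. The sketch below assumes a hypothetical input of `(replier, replied_to)` pairs and writes the `node, friend/follower` CSV format named in the prompt; it does not fetch anything from X, and the account names in the usage example are made up.

```python
import csv
import io
from collections import Counter


def interaction_edges(replies):
    """Return de-duplicated (node, neighbor) edges from (replier, replied_to) pairs."""
    seen = set()
    edges = []
    for replier, target in replies:
        edge = (replier.lower(), target.lower())
        # Skip self-replies and repeated interactions between the same pair.
        if edge[0] != edge[1] and edge not in seen:
            seen.add(edge)
            edges.append(edge)
    return edges


def edges_to_csv(edges):
    """Serialize edges in the 'node, friend/follower' layout used in the prompt."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["node", "friend/follower"])
    writer.writerows(edges)
    return buf.getvalue()


def most_connected(edges, top=3):
    """Count how many edges touch each account and return the busiest ones."""
    degree = Counter()
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return degree.most_common(top)


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [("acct_a", "hub"), ("acct_b", "hub"), ("acct_a", "hub")]
    edges = interaction_edges(sample)
    print(edges_to_csv(edges))
    print(most_connected(edges, top=1))
```

A high degree only shows where to look next. It is not evidence that an account is a bot, so timestamps and content still need human review.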

Risks and Challenges

- Bias, hallucinations, sabotage: AI models can mislead, over-agree (sycophancy), or fail under adversarial use.

- Safety concerns: Synthetic extortion, AI-driven self-harm influence, and disinformation pose real threats.

- Trust and reproducibility: AI outputs may vary over time; consistency can be improved with context engineering and retrieval-augmented generation (RAG).

- Digital trust is essential—analysts must know their tools, providers, and limitations.

Persona Vectors

Persona Vectors: Monitoring and Controlling Character Traits in Language Models

Language models can develop unexpected “personalities” or “moods,” which shift unpredictably. Examples range from dramatic (Bing’s declarations of love) to unsettling (Grok’s antisemitic comments). Anthropic identified “persona vectors” within AI neural networks: patterns of activity controlling character traits. Analogous to brain regions, these can be used to:

- Monitor personality changes in models

- Mitigate undesirable personality shifts

- Identify training data causing problematic traits

Applications and Findings:

- Monitor Personality Shifts: Persona vector activations detect model personality shifts (e.g., evil, sycophancy, hallucination) during conversations or training

- Preventative Steering: During training, Anthropic steers models toward undesirable vectors (like a vaccine), building resilience to harmful data without capability loss.

- Flag Problematic Data: Persona vectors predict training impacts on model personality, identifying problematic datasets even when human reviewers miss them.

These applications were demonstrated on Qwen 2.5-7B-Instruct and Llama-3.1-8B-Instruct. The automated method extracts persona vectors for any defined trait, a powerful tool for aligning AI with human values.

Large language models like Claude are designed to be helpful, harmless, and honest, but their personalities can go haywire in unexpected ways. Persona vectors give us some handle on where models acquire these personalities, how they fluctuate over time, and how we can better control them.

Model Sabotage Research

https://www.anthropic.com/research/sabotage-evaluations

Sabotage Evaluations for Frontier Models

- Human decision sabotage: Can the model steer humans toward bad decisions without appearing suspicious?

- Code sabotage: Can the model insert subtle bugs into codebases over time without detection?

- Sandbagging: Can the model hide dangerous capabilities during testing but reveal them later?

- Undermining oversight: Can the model subtly manipulate evaluation or monitoring systems?

Explainability (XAI)

What XAI Aims to Achieve

- Demystify AI Decisions

- Build Trust

- Ensure Accountability

2025: Explainable AI becomes a strategic imperative for adoption worldwide

Organizations at the Forefront of XAI:

- DARPA: XAI program since 2017, creating AI that explains reasoning in defense & healthcare

- Google: Interpretability tools in TensorFlow, transparency for Search & diagnostics

- Anthropic: Safety research for LLMs, frameworks to understand & control generative outputs

- IBM: Enterprise XAI solutions with watsonx.governance for bias detection & compliance

- Microsoft: Azure AI services with fairness auditing & transparent governance standards

- Meta & Others: Interactive XAI for social media; OpenAI & Seldon focusing on trust & safety

Cognitive Loop via In-situ Optimization (CLIO): self-reflection at runtime & self-adapting

Seemingly Conscious AI (SCAI): not whether it is conscious, but that it seems conscious

Future of AI in Organizations

- AI will take on collection and processing tasks heavily, while humans remain critical in analysis and judgment.

- Analysts may evolve into “conductors” of AI agents, orchestrating multiple automated processes while applying critical thinking.

- Global competition matters: U.S. leads in models, but China is rapidly growing in AI research talent.

- Organizational design will shift—smaller teams of skilled humans supported by AI “force multipliers.”

Q&A Insights

- Client mistrust of AI: Transparency and education about how AI contributes to outputs is key.

- AI in dissemination: Already being used to compile reports, though humans refine final outputs.

- Analyst training: Future analysts must balance hands-on experience with orchestration of AI-driven collection.

- Reproducibility: Context engineering, fine-tuning, and RAG can improve consistency of AI outputs, but human oversight remains essential.

Conclusion

Chris Poulter emphasizes that AI will not replace human analysts but augment them. By mastering AI literacy, context engineering, and ethical use, OSINT professionals can leverage AI as a true force multiplier. The ultimate foundation remains digital trust, human judgment, and critical thinking.

Notable quotes

On the Role of AI in OSINT

- “We’re not getting away from Gen AI, so let’s think about how we can do it.”

- “Do you want to be a ChatGPT-er, or do you want to be an OSINT-er?”

- “Choose the right tool for the job. One does not fit all.”

On Prompting and Context

- “Prompt engineering is like asking a single good question. Context engineering is about setting the frame.”

- “Consistency across tasks… that’s what we’re trying to achieve. The magic lives in how you configure it.”

On Human–AI Teaming

- “The human is not going to get any less important—they can just do a whole lot more and have a bigger impact where it matters.”

- “In the future, analysts won’t just be collectors, they’ll be conductors—directing a complex series of agents and fusing it all together in real time.”

On Risks and Trust

- “Digital trust is the most valuable currency of modern society.”

- “AI literacy actually matters. If you don’t understand the differences between platforms, you’ll be left behind.”

- “With great efficiency comes great risk—synthetic content, sabotage, and disinformation are not abstract problems, they’re here now.”

On the Future

- “Humans continue to be the most important ingredient—understanding, judgment, critical thinking. AI is just the multiplier.”

- “What do organizations look like in the future? AI-heavy for efficiency, human-heavy for accuracy.”

——

Chinese Espionage Operations and Tactics (virtual)

Professor Nicholas Eftimiades

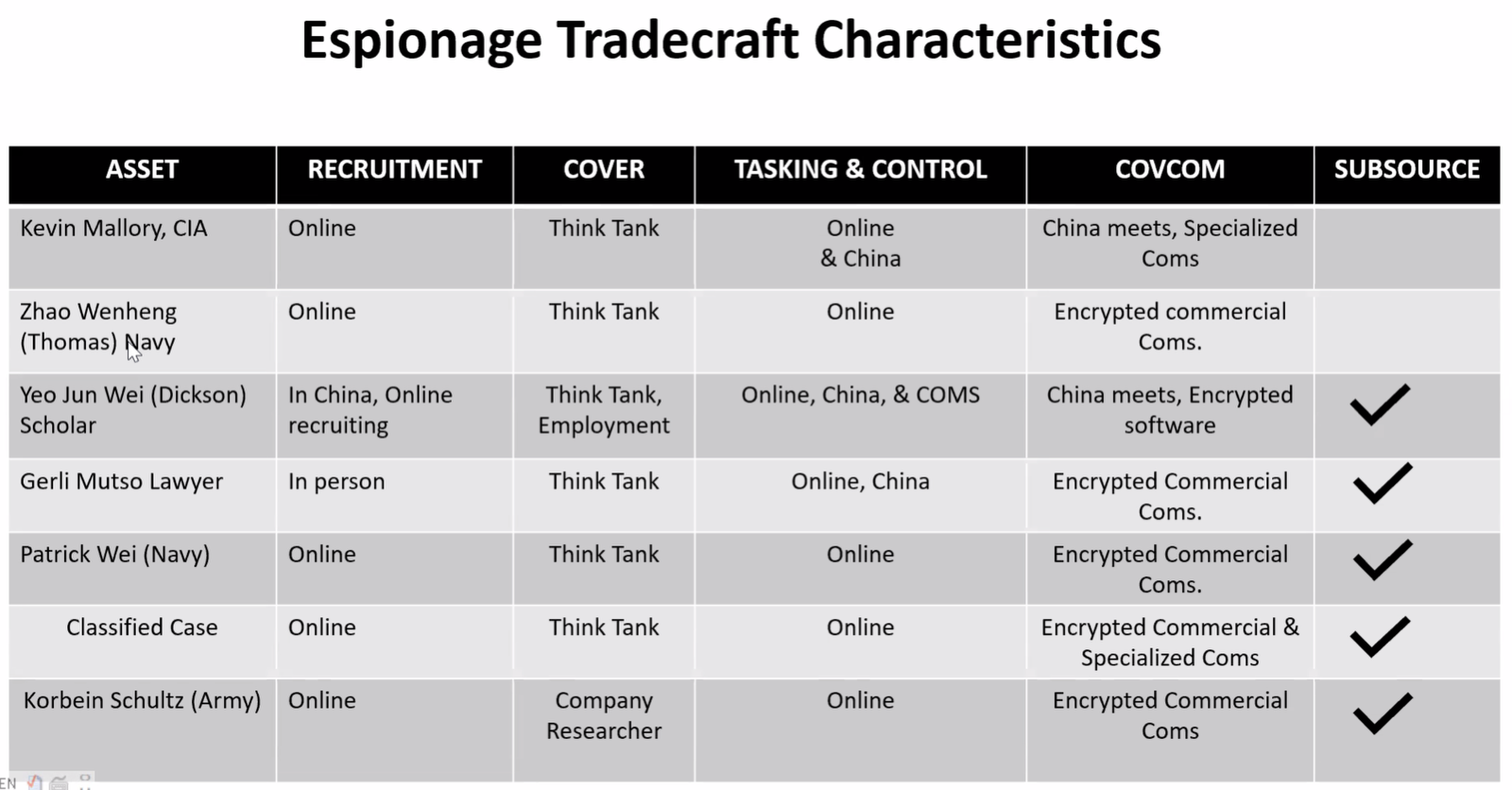

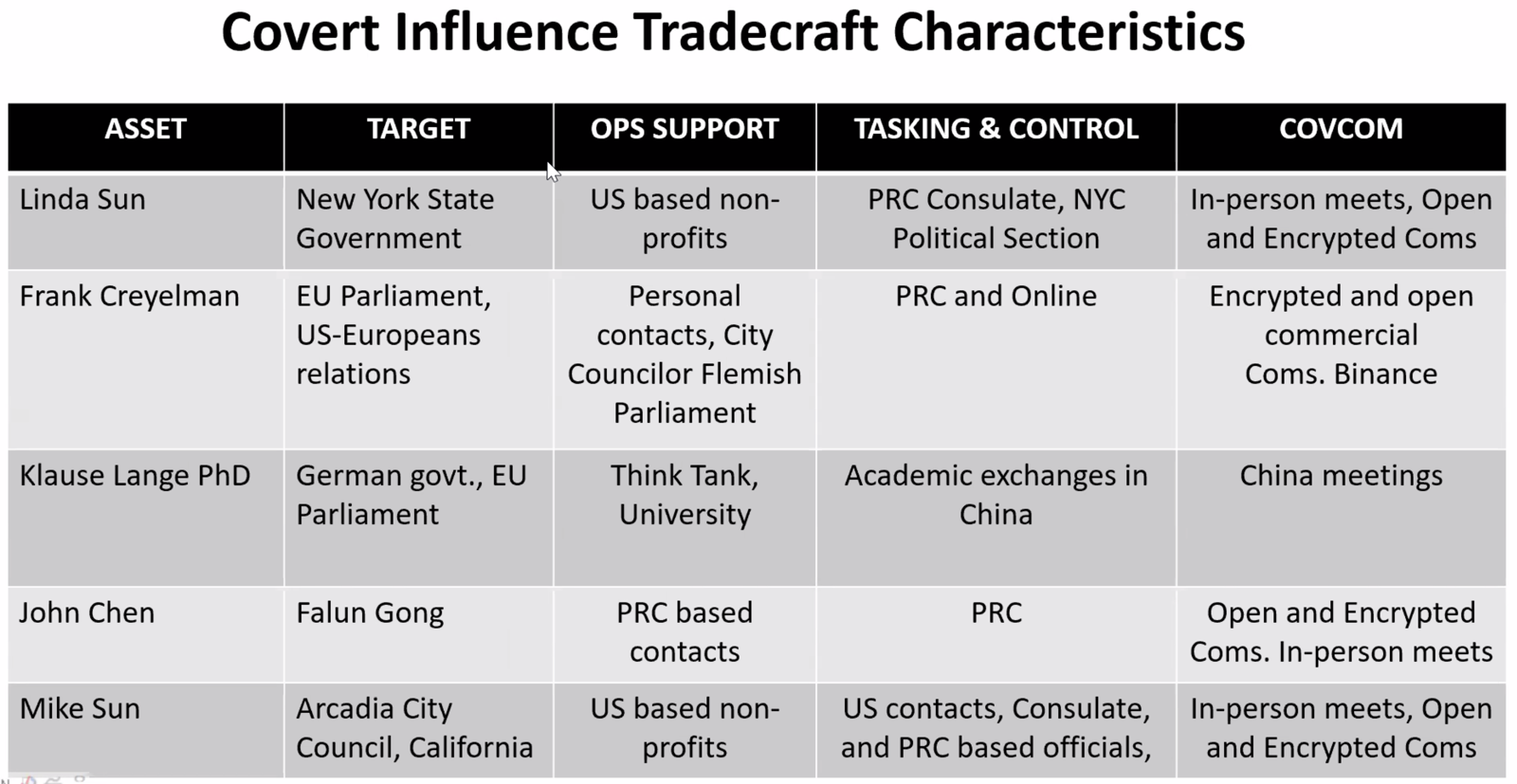

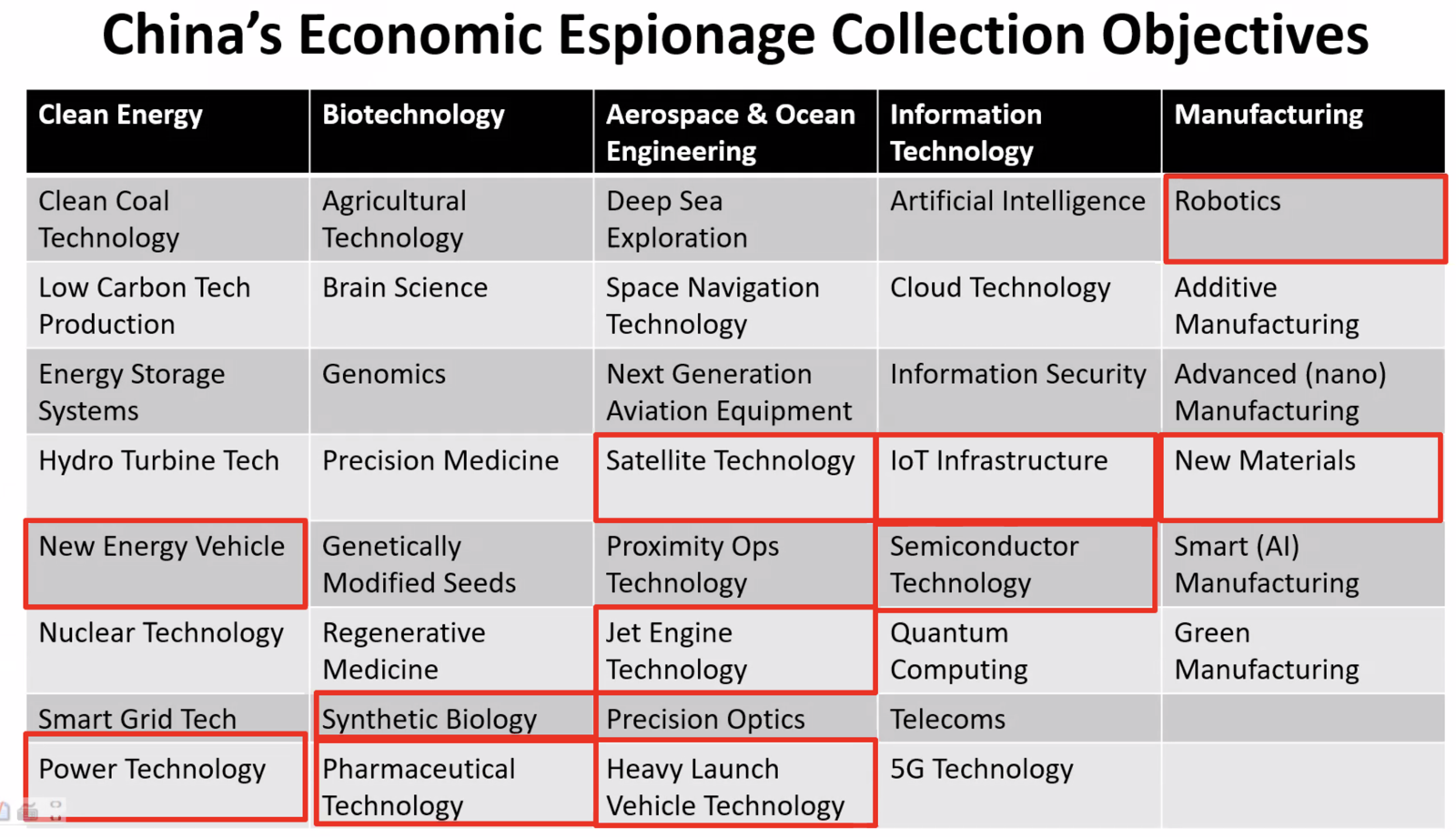

Nicholas Eftimiades, a senior fellow at the Atlantic Council and expert on Chinese espionage, presented findings from his extensive database of 930+ espionage cases involving China. His work focuses primarily on human intelligence operations (rather than cyber), highlighting how China conducts espionage across state, military, corporate, and academic lines.

He explained that Chinese espionage covers a broad spectrum: traditional spying, economic espionage, illegal technology transfers, and covert influence operations. Unlike the West’s concept of “non-traditional collectors,” in China it is normal for students, academics, companies, and even local politicians to be drawn into intelligence activities.

Key Insights

- Scope of Actors: The Ministry of State Security (MSS), People’s Liberation Army (PLA), state-owned enterprises, universities, and the United Front Work Department all play roles in intelligence collection.

- Tradecraft: China uses both sophisticated methods (encrypted comms, third-country meetings) and surprisingly simple ones (WeChat, PayPal transfers, resume harvesting).

- Targeted Technologies: The focus aligns with China’s national priorities—semiconductors, aerospace, biotech, nanotech, and energy. Theft surged after sanctions (e.g., on ZTE) and during COVID, especially in biotech.

- Influence Operations: Cases show Chinese operatives infiltrating local politics in the U.S. and Europe, shaping policy, and targeting dissidents.

- Motivations: Western counterintelligence often uses the MICE acronym (Money, Ideology, Coercion, Ego). But Eftimiades argues China’s system requires a new framework: BEWARE—Business opportunities, Ethno-nationalism, Wealth, Academic advancement, Repression, and Emotional bonds.

- Global Reach: His database is worldwide, though fewer cases are reported in Africa and Latin America due to lack of enforcement or prosecutions.

Notable Quotes

- On the nature of Chinese espionage: “China has a whole-of-society approach towards collecting multiple categories of information—commercial, scientific, and national security. Espionage is not just an intelligence service function, it’s spread across society.”

- On motivations: “The Western MICE model doesn’t work for China. You need to think in terms of BEWARE—Business, Ethno-nationalism, Wealth, Academic advancement, Repression, and Emotional bonds.”

- On ethno-nationalism: “Do this because you’re Chinese. It’s not about supporting the political system, it’s service to Mother China.”

- On academic espionage: “In China’s Confucian-based society, scholarship brings high status. We’ve seen people steal research just to negotiate for professorships back home.”

- On influence operations: “We’ve seen Chinese officials sitting in on state-level political meetings in the U.S., literally shaping policy from inside.”

- On the bottom line: “Money accounts for about half of all cases. But in economic espionage specifically, 61% are motivated not by cash, but by the chance to build a competing business in China.”

——

OSINT in Action: Practical Techniques for Online Investigations

Ritu Gill, OSMOSIS Association

Ritu Gill, an experienced OSINT analyst and president of the Osmosis Association, shared her insights on building sustainable and ethical OSINT practices. Drawing on her 12 years with the RCMP and her current roles in the global OSINT community, she stressed that tools alone are not enough—critical thinking, methodology, and operational security (OPSEC) are what turn information into intelligence.

Information ≠ Intelligence

She distinguished between open-source information and open-source intelligence: raw data has no value until it is analyzed, contextualized, and made relevant to the customer’s needs. To achieve this, investigators must combine tools with tradecraft, rigorous documentation, and ethical standards.

Gill emphasized the importance of OPSEC, particularly the use of sockpuppet accounts for research, and walked through challenges in creating and maintaining them without getting flagged. She also outlined best practices for vetting tools (checking who owns them, where servers are, whether searches are logged), handling evidence (timestamps, URLs, reproducibility), and avoiding sloppy habits that compromise credibility. She also mentioned URLScan.io as a tool.

She demonstrated practical OSINT techniques — archiving profiles via the Wayback Machine, extracting thumbnails for reverse video searches, checking Facebook “edit history,” exploiting metadata in uploaded documents, manipulating URLs for larger images, and using platforms like Epieos for geolocation, Google reviews and email clues.
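As one illustration of the archiving step, the Wayback Machine exposes a few well-known URL patterns that can be built for any target page. The sketch below only constructs the URLs and makes no network requests; the `wayback_urls` helper and the example target are hypothetical, and the endpoints should be confirmed against the Internet Archive's current documentation before relying on them.

```python
from urllib.parse import urlencode


def wayback_urls(target: str) -> dict[str, str]:
    """Build Wayback Machine URLs for checking, browsing, and saving a page."""
    return {
        # JSON endpoint reporting the closest archived snapshot, if any.
        "availability_api": "https://archive.org/wayback/available?" + urlencode({"url": target}),
        # Calendar view listing all captures of the page.
        "all_captures": "https://web.archive.org/web/*/" + target,
        # Requests a fresh capture ("Save Page Now") when opened in a browser.
        "save_page_now": "https://web.archive.org/save/" + target,
    }


if __name__ == "__main__":
    for name, url in wayback_urls("https://example.com/profile").items():
        print(f"{name}: {url}")
```

Opening these from a research environment rather than a personal browser keeps the OPSEC practices described above intact.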

Sock Puppets

Sock puppets or research accounts are online fictitious identities.

Purposes:

- For open source research to access content that requires an account.

- To maintain the privacy and security of the OSINT investigator.

- To maintain the integrity of the investigation.

Investigative Methodology (Workflow)

- Define objective → Why are you doing this?

- Collect & verify → Structured, systematic gathering

- Validate & fact-check → Ensure accuracy and reliability

- Document & reuse → Record findings for defensible results

Documentation & Evidence Handling

- Collecting online evidence = more than screenshots.

- Preserve metadata, dates, and timestamps.

- Validate sources and confirm reliability.

- Reports must be reproducible and defensible.

- Clear documentation and articulation strengthen your case.

- Forensic OSINT - ForensicOSINT.com

- A Chrome extension that allows OSINT investigators to preserve online evidence.
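The evidence-handling points above (URL, timestamp, reproducibility) can be captured in a simple record alongside each saved file. This is a minimal sketch under assumed field names; it is not how Forensic OSINT or any other specific tool works internally.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def evidence_record(url: str, content: bytes, captured_at: Optional[datetime] = None) -> dict:
    """Describe one captured item: where it came from, when, and a content hash."""
    captured_at = captured_at or datetime.now(timezone.utc)
    return {
        "url": url,
        # Always store the capture time in UTC so reports are unambiguous.
        "captured_at_utc": captured_at.astimezone(timezone.utc).isoformat(),
        # The SHA-256 digest lets anyone confirm the saved file is unchanged.
        "sha256": hashlib.sha256(content).hexdigest(),
        "size_bytes": len(content),
    }


if __name__ == "__main__":
    page = b"<html>example capture</html>"
    record = evidence_record("https://example.com/post/1", page)
    print(json.dumps(record, indent=2))
```

Storing the hash at capture time means a later reviewer can recompute it on the saved file and confirm nothing was altered, which supports the "reproducible and defensible" requirement.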

Analytical Mindset

- To excel in open source intelligence, you need more than tools; you need an investigative mindset.

- Critical thinking & bias awareness

- Turning data into insight

- Verify and cross-reference all sources

Analysis is where information becomes intelligence.

Critical Thinking (5W1H Framework):

- Objective / Research Question - What is being investigated?

- What - What happened? (events, actions, assets)

- Where - Where did it happen? (places, platforms)

- When - When did it happen? (timeline, sequence)

- Who / With Whom - Who was involved? (actors, organizations, partners)

- Why - Why did it happen? (motives, drivers)

- Which Method / How - How was it done? (methods, processes)

Verification Matters

- Accuracy comes first - information must be precise before it is shared or acted upon.

- Question the source - not everything you read, including from AI, is reliable.

- Confirmation drives action - verified facts lead to stronger decisions and outcomes.

How to Verify Sources

- Compelling - the information should be clear, persuasive, and relevant.

- Credible - the source must be trustworthy and authoritative.

- Corroborate - facts should be supported by multiple independent sources.

Finally, Gill urged organizations to invest in analysts and tradecraft, not just technology. While tools evolve and break, critical thinking, verification skills, and ethical foundations endure. The Osmosis Association promotes this ethos through community, training, and its Open Source Certification:

- The OSC is a professional certification from the OSMOSIS Association that validates skills in collecting and analyzing open source data for purposes in market research, security, and law enforcement.

- The OSC exam consists of 100 questions with a 90-minute time limit.

- What topics does the OSC cover?

- Critical Thinking

- Tradecraft

- Reporting

- Laws & Ethics

https://osmosisinstitute.org/osc/osc-exam-faqs

Notable Quotes

- On the difference between information and intelligence: “Information is not intelligence. Collecting data is not the same as producing intelligence.”

- On the value of context: “If I’m just sending a Facebook friends list, the client will say, ‘So what?’ The value comes when you analyze it, add context, and answer the question.”

- On tools vs. tradecraft: “Tools are awesome: they speed things up. But if we just rely on tools and no tradecraft, mistakes get amplified.”

- On professionalism: “Accountability separates professionals from hobbyists.”

- On verification: “You can collect information, but if it isn’t accurate, it’s useless to your client. Verification and fact-checking are essential.”

- On building resilience: “Invest in your analysts, not just shiny tech. Critical thinking and methodology will outlast any tool.”

——

OSINT as a Force Multiplier for Executive Protection Operations

Matthias Wilson, PLOSINT Consulting

Effective executive protection relies on strong intelligence support, beginning with an awareness of the protected individual’s online footprint. Explore how OSINT enhances situational awareness and why collaboration between analysts and close protection operatives is key to building trust and delivering precise, actionable intelligence.

Matthias Wilson, with a background in military special forces and intelligence work at BMW, spoke about digital executive protection and the role of OSINT (open-source intelligence) as a force multiplier for close protection teams. His framework rests on three pillars:

- Raising awareness – Training executives and their families about digital threats and oversharing online.

- Mapping digital footprints – Identifying what information about principals and their families is already exposed.

- Monitoring in real-time – Using OSINT to track potential threats and provide actionable intelligence to protection officers.

Awareness and Trust

- Protection work is built on trust and transparency. Analysts must explain to principals what they are doing, especially in places with strict privacy laws (like GDPR).

- Much risk comes from family members oversharing online—children on TikTok or Instagram can inadvertently expose home locations.

Digital Footprint

- Borrowing military logic (“find, fix, finish”), Wilson reframed this as identify, assess, and remove.

- Sometimes risks can be mitigated by simply asking a family member to delete a post. But if outsiders post sensitive data, removal is difficult — platforms rarely cooperate.

Real-time Monitoring

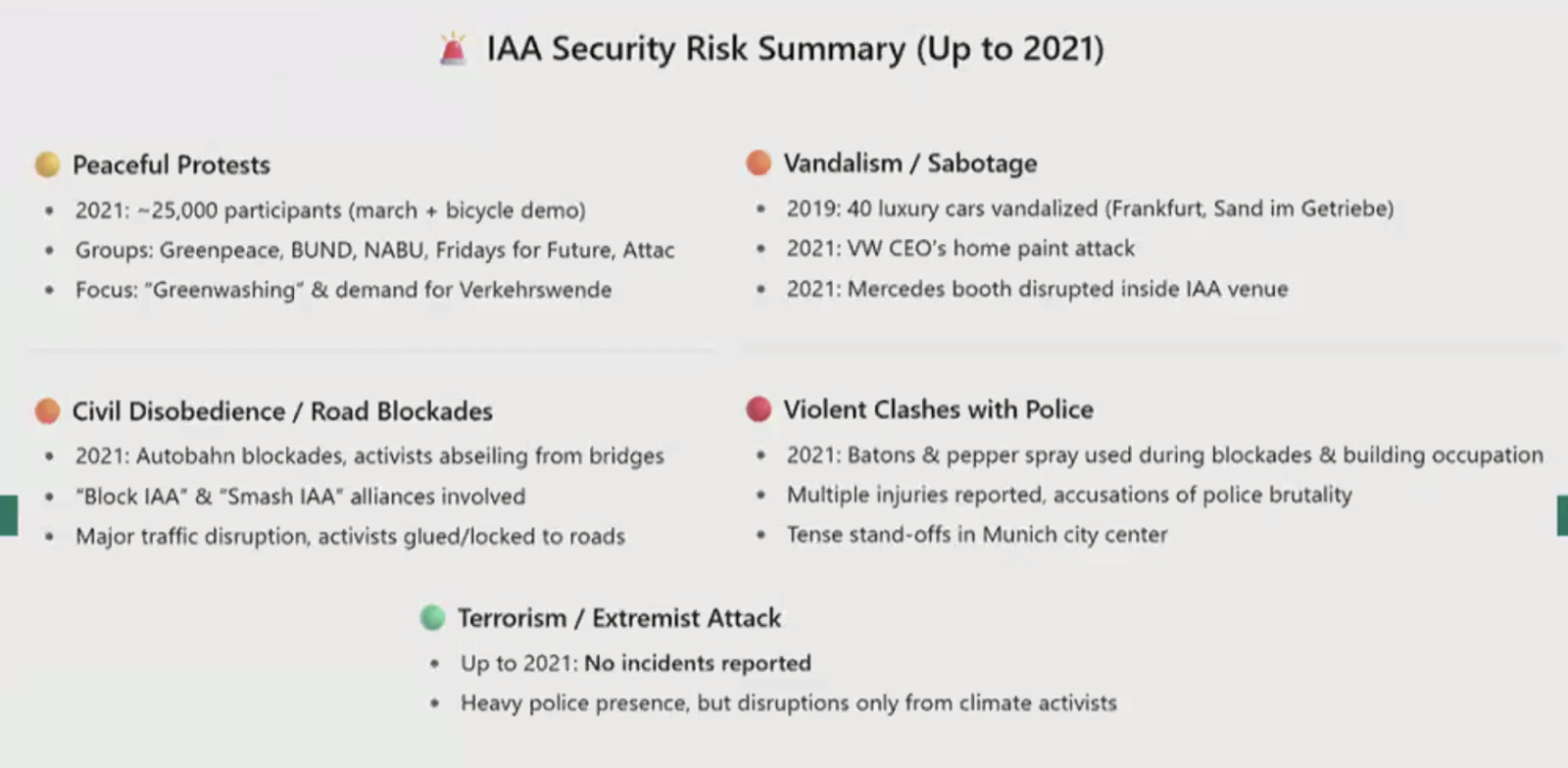

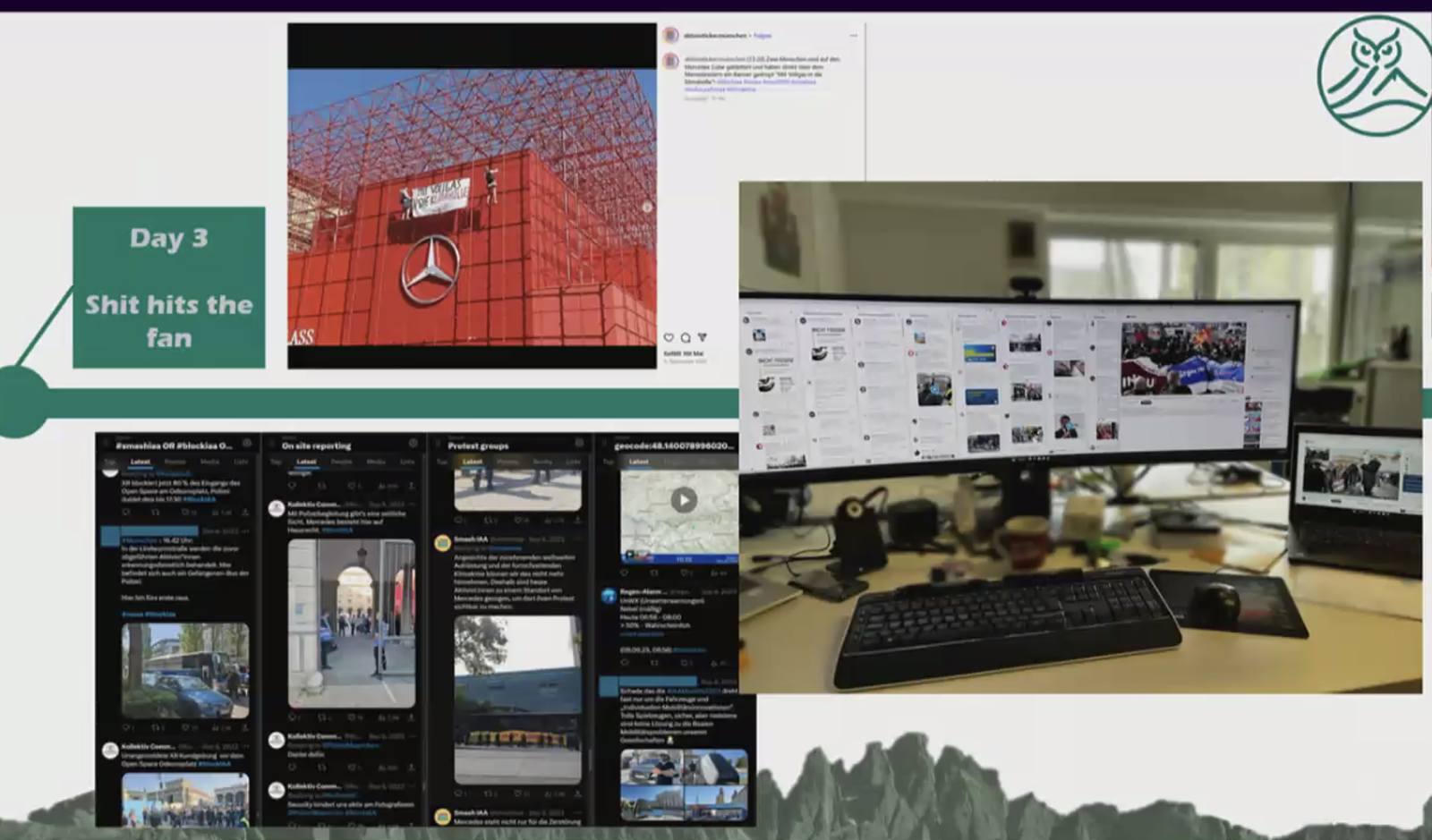

- Wilson stressed distinguishing between incidents (direct threats, e.g., death threats, targeted protests) and events (operational disruptions, e.g., traffic jams, demonstrations). Both require OSINT support, but with different urgency.

- He gave a detailed case study of the International Automobile Exhibition in Munich, showing how OSINT identified activist groups, their social media presence, and past protest tactics.

- Analysts must balance specific intelligence for protection teams with privacy concerns about over-disclosing personal details to third parties.

Operational Lessons

- Analysts must know both the enemy and the principal’s lifestyle to deliver relevant intelligence.

- OSINT support should be integrated into field operations, with analysts adapting their outputs—sometimes a quick text or call is more useful than a polished report.

- During protests, analysts track activists’ live posts, geolocate images, and provide real-time alerts to close protection officers.

- Teamwork is essential: when overwhelmed, even drivers or other staff can assist as “OSINT deputies” to monitor feeds.

Tools and Techniques

- Useful monitoring tools include Talkwalker alerts, Google Alerts, Distill.io, TweetDeck/X Pro, DeckBlue (for Bluesky), Telegram monitoring, and direct platform searches.

- However, Wilson emphasized that core OSINT tradecraft — geolocation, source verification, critical thinking — remains crucial, especially when AI tools fall short.

Key Takeaways

- Preparation prevents failure: planning early is essential.

- “Proper Planning and Preparation Prevents Piss Poor Performance”

- Know your adversary and your principal: actionable intelligence depends on context.

- OSINT is only one piece: it must work alongside boots-on-the-ground protection.

- Transparency and expectation management with clients are critical.

- This is not a lone wolf job: effective digital executive protection requires collaboration and trust between analysts, protection officers, and even principals’ families.

Notable Quotes

- On the foundation of protection: “The principal has to trust the close protection officer to do a good job and vice versa. The same thing also applies to an intelligence analyst.”

- On awareness: “If a high net worth individual doesn’t disclose their location, their kids might, by dancing around on TikTok.”

- On OSINT methodology: “In military terms, it’s find, fix, finish. In digital executive protection, it’s identify, assess, and remove.”

- On actionable intelligence: “I love it when I can create something people act upon, when decisions are made because of my work, not just a nice fancy report no one reads.”

- On knowing your customer: “The close protection officers are my intelligence customers, not the principal. They don’t need a glossy PDF; they need a heads-up text in the field.”

- On teamwork: “This is not a lone wolf job. Teamwork between analysts, protection officers, and even OSINT deputies is essential to create actionable intelligence.”

- On kids and digital risks: “You can’t just tell a 14-year-old to stop posting on Instagram. You have to show them: geolocate a celebrity together and then say, ‘This could be you.’ That’s when it clicks.”

——

(Disinformation: A Strategic Threat to Enduring Capability

Rebecca Jones, Sibylline)

Disinformation, propaganda, lies and deceit are as old as time. But in the age of social media and AI, we find ourselves facing a different and more dangerous type of deception. This talk examines why disinformation has become so invasive, explores how it’s undermining democracy and business, and what new forms of deception may emerge in the years ahead.

——

Trust in User-Generated Evidence: Insights from the TRUE Project (virtual)

This presentation will not be recorded

Professor Yvonne McDermott Rees, Swansea University

https://www.trueproject.co.uk

User-generated evidence is playing a growing role in documenting human rights violations but deepfakes and synthetic media are challenging its credibility. Drawing on findings from the TRUE project, this session examines public trust in citizen-captured content and the implications for legal and accountability processes in an era of increasingly sophisticated misinformation.

Professor Yvonne McDermott Rees, principal investigator of the TRUE Project, examined how deepfakes and AI-generated media affect trust in user-generated evidence (UGE)—photos, videos, or recordings captured by individuals and often used in human rights investigations and international criminal law.

The Nature of User-Generated Evidence (UGE)

- UGE can be open-source (posted online) or closed-source (shared directly with investigators).

- Working definition: open- or closed-source information generated through users’ personal digital devices that may be of evidentiary value and used in legal proceedings.

- It has become crucial in international cases, from proving atrocities to linking perpetrators with crimes. For example, videos posted online were central to arrest warrants at the International Criminal Court (ICC).

- Its strength lies in providing real-time, visual accounts where traditional access to sites of atrocities is impossible.

Challenges of Deepfakes

- The TRUE Project asks: Has AI undermined trust in UGE?

- The risk may be less about fake evidence entering courts, and more about real evidence being dismissed as fake (“the liar’s dividend”).

- Early deepfakes were crude (extra limbs, distorted faces), but detection is getting harder as AI technology improves.

- Research question: How have deepfakes and related technological advances impacted on trust in user-generated evidence, and what does that mean for the place of user-generated evidence in human rights accountability processes?

- The Liar’s Dividend: “[A] skeptical public will be primed to doubt the authenticity of real audio and video evidence. This skepticism can be invoked just as well against authentic as against adulterated content. Hence what we call the liar’s dividend: this dividend flows, perversely, in proportion to success in educating the public about the dangers of deep fakes.” (Chesney & Citron)

Advantages of OSINT for human rights investigations

(Murray, McDermott and Koenig, 2022)

- Provides information where crime scene cannot be accessed

- Can provide lead, linkage, contextual or corroborating evidence

- Democratizing potential

- Immediate and more objective than witness testimony

- A visual, real-time account of events

- Might reduce trial costs and length

Research Approach

Interview Series with Judges at the ICC

- Semi-structured interviews with 12 (of 18) ICC judges between November 2022 and March 2025

- Face-to-face and online interviews lasting 30-60 minutes

- Three themes emerged through reflexive thematic analysis: the limits of UGE, the credibility of the source, and the role of experts

The project investigates UGE through:

- Legal analysis – Building a database of cases where UGE has been used in atrocity trials.

- Psychological studies – Measuring how laypeople perceive the reliability of UGE. Results show a Dunning-Kruger effect: people who think they’re good at spotting deepfakes are often worse at it.

- Mock trials – Running jury simulations to see how real people reason about evidence, including staged scenarios with videos of alleged war crimes.
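The Dunning-Kruger pattern mentioned in the psychological studies can be pictured as a negative correlation between how good people say they are at spotting deepfakes and how accurate they actually are. The sketch below shows one simple way to check for such a pattern using a Pearson correlation. It is an assumption about how this could be measured, not the TRUE Project's method, and the numbers are invented purely for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (spread_x * spread_y)


# INVENTED illustrative data, NOT results from the TRUE Project.
# self_rated: participant's own 1-5 rating of their deepfake-spotting skill.
# accuracy: share of test clips that participant classified correctly.
self_rated = [1, 2, 3, 4, 5]
accuracy = [0.70, 0.65, 0.60, 0.50, 0.45]

r = pearson(self_rated, accuracy)
print(f"correlation between self-rated skill and accuracy: {r:.2f}")
```

A clearly negative value of `r` in real data would match the pattern described in the talk: higher self-rated skill going together with lower actual accuracy.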

Key Findings

- Incompleteness: Judges and jurors worry videos show only part of the story. As one judge noted, “You can take a video of what you need for your own position and then stop.”

- Corroboration: Judges differ—some accept multiple videos as corroboration, while others insist on witnesses or metadata.

- Source bias: Both judges and jurors question the motives of those who filmed or shared the content, noting political or partisan bias.

- Expert verification: Even when experts like Bellingcat analysts were seen as credible, jurors still doubted whether authenticity could be fully established. Does reliance on accepted standards, such as the Berkeley Protocol, suffice?

- Trust factors: Realism (shaky cameras, background noise) increases perceived reliability, but unknown sources and edits decrease it.

Source credibility - Bias of the source

Judges stated that:

- an image does not speak for itself

- the person who filmed content might have done so selectively and stopped at a critical moment

- bias of the source should always be considered, but not necessarily assumed

Jurors wondered:

- whether the creator of the video was biased and which motives they had

- about the bias of the person who captured content and the person who shared it online

Broader Implications

- Courts have not yet widely accepted the “deepfake defense,” but its potential remains concerning.

- Civil law jurisdictions (e.g., Germany, Netherlands, Sweden) rely heavily on forensic institutes to validate such evidence.

- As human rights cases are often politically charged, skepticism toward UGE may increase, making corroboration and expert testimony vital.

- UGE reduces the trauma of witness testimony and offers objectivity compared to fading memories—but it is not free from manipulation, bias, or perception issues.

Notable Quotes

- On real-time evidence: “Gazans are broadcasting their own destruction in real time.”

- On deepfakes: “The biggest risk with AI is not that deepfake footage will enter the courtroom, but that people will dismiss real evidence as fake.”

- On incompleteness: “The video can only be part of the whole… you can take a video of what you need for your own position and then stop.”

- On perception of skill: “The better people think they are at spotting deepfakes, the worse they actually are.”

- On the role of experts: “Our research highlights the importance of experts — but even credible experts can’t always overcome jurors’ doubts about authenticity.”

——

Building Safe, Enduring Capability in a Volatile World

Dr. Nicole Matejic

OSINT Combine’s Chief Intelligence Officer, Jane van Tienen, facilitates a conversation with Dr. Nicole Matejic, a National Security Behavioural Economist, on why playing the long game matters in OSINT. From ethical strain to influence operations, this discussion explores what it takes to build resilient, principled capability in a world shaped by volatility, grey zones and contested truth.

Dr. Nicole Matejic, a national security-focused behavioral economist, author, and frequent advisor to NATO, the UN, and governments, spoke about influence, decision-making, and building enduring capability in a volatile world.

She described her unconventional career path — from photojournalism and private investigations, to customs and defense intelligence, to countering ISIS propaganda — highlighting how influence, surveillance, and information warfare shaped her perspective.

Influence and Decision-Making

Matejic explained that behavioral economics bridges psychology and economics, focusing on why people believe what they believe. Understanding this helps in preventing radicalization, countering disinformation, and designing better deterrence strategies.

She also noted how dystopian fiction and pop culture provide a “cognitive safe space” for exploring difficult or abstract security issues, allowing leaders and the public to grapple with potential futures without immediate polarization.

OSINT and Enduring Capability

Matejic emphasized that enduring OSINT capability is not just about tools or data, but about balancing human and machine roles:

- AI can lift cognitive burdens (e.g., handling harmful content), but over-reliance risks weakening critical thinking and analytic dexterity in new analysts.

- Capability requires methodology, context, and care—not just technological adoption.

Wellbeing and Risk

She warned of cognitive and psychological costs in intelligence and OSINT work:

- Exposure to violent extremist or CSAM content takes a toll, often underestimated by organizations.

- Too often, harms are ignored until after they occur.

- Building a culture of psychosocial safety in workplaces is essential; if institutions fail, professionals will migrate elsewhere.

Activism vs. Analysis

Matejic discussed the tension between lived experience and structured analytic contribution:

- Survivor testimony and activist perspectives are invaluable but can introduce bias.

- Analysts must caveat limitations and avoid presenting passionate advocacy as objective truth—decision-makers may otherwise form distorted views.

Hope and Future

Despite challenges, Matejic is optimistic:

- AI, if applied responsibly, will reduce harmful content exposure for future analysts.

- The next generation will benefit from safer workplaces and a stronger culture of care.

- The OSINT community itself is building the standards and support networks needed for resilience (or as she prefers, “presilience”—focusing on preparation rather than just recovery).

Notable Quotes

- On influence: “If we can unpick why people believe the things they believe, then perhaps we’ve got a better chance at deterrence and prevention.”

- On dystopian fiction: “Fiction is a safe cognitive space. It helps us contemplate ideas that may otherwise be too hard to fathom.”

- On capability: “We need to take a human-and-the-machine approach rather than treating them as one and the same thing.”

- On risks of AI dependence: “What happens to the graduate whose starting point is AI? What happens to their critical thinking skills, their reasoning, their analysis?”

- On workplace safety: “Accountability and culture matter—if your workplace isn’t keeping you safe, maybe you should move. Researchers will, and perhaps they should.”

- On activism vs. analysis: “Passion can upset the balance when it’s portrayed as fact. We need to be clear—it’s one piece of the puzzle, not the entire puzzle.”

- On optimism: “The next generation of analysts will have safer workplaces and won’t be exposed to the things we had to wade through. Times have changed—the safety train is leaving, and I’m optimistic about that.”

Presilience

https://amplifypublishinggroup.com/product/nonfiction/business-and-finance/leadership-and-management/presilience/

https://presilience.info/what-is-presilience/

https://instituteofpresilience.edu.au

Trust and Resilience in OSINT: A Cross-Speaker Synthesis

⸻

1. The Value of Information

- Peter Greste (Journalism): “Information is nothing. It only becomes intelligence when it’s tested, contextualised, and made accountable.” He warns that without accuracy and ethics, journalism (and OSINT) loses legitimacy.

- Ritu Gill (OSINT tradecraft): Reinforces this: raw data ≠ intelligence. Context and analysis are what make findings relevant to clients.

- Yvonne McDermott Rees (Law): Courts see user-generated evidence as powerful but incomplete. A video alone doesn’t tell the full story; corroboration and expert verification are key.

Synthesis: Across domains, context, verification, and accountability transform information into trusted intelligence.

⸻

2. Technology and AI as Force Multipliers

- Chris Poulter (Generative AI): AI can supercharge analysts through context engineering and autonomous agents. But analysts must retain oversight—humans are conductors, not passengers.

- Nicole Matejic (Human–machine interplay): Over-reliance on AI risks eroding critical thinking in new analysts. Capability means blending machine efficiency with human judgment.

- Matthias Wilson (Executive protection): Tools help, but boots-on-the-ground teamwork and judgment remain decisive during real-world incidents.

Synthesis: AI is a force multiplier, but not a replacement. Human oversight, judgment, and critical thinking remain the foundation of resilience.

⸻

3. Threats, Espionage, and Influence

- Nicholas Eftimiades (Espionage): China’s espionage is “whole-of-society,” involving state, corporate, and academic actors. Motivations go beyond money—business opportunities, nationalism, academic advancement.

- Nicole Matejic (Influence): Understanding why people believe what they believe is key to countering extremism and disinformation.

- Peter Greste (Trust): Warns of journalism’s loss of social license through erosion of trust; OSINT could face the same if ethical standards are neglected.

Synthesis: Both state espionage and online influence show that trust is fragile—legitimacy depends on understanding motivations and maintaining ethical standards.

⸻

4. Tradecraft, Methodology, and Professionalism

- Ritu Gill (OSINT tradecraft): Tools are useful but amplify errors without proper methodology. Accountability, documentation, and OPSEC distinguish professionals from hobbyists.

- Matthias Wilson (Close protection): Intelligence must be actionable—sometimes a quick text matters more than a polished report. Analysts must know both the adversary and the principal.

- Yvonne McDermott Rees (Legal admissibility): User-generated evidence requires corroboration, source scrutiny, and clear caveats on limitations.

Synthesis: Professional tradecraft and transparency are critical. Tools, data, or flashy visuals mean nothing without method, discipline, and accountability.

⸻

5. Human Costs and Resilience

- Nicole Matejic (Wellbeing): Exposure to harmful content and cognitive overload are underestimated risks. Organizations must build cultures of presilience (preparing for risks, not just bouncing back).

- Matthias Wilson (Safety in protection): Teamwork and awareness reduce risk. Families and even children must be educated about digital footprints.

- Yvonne McDermott Rees (Juries & judges): Human perception biases affect how evidence is received—trust can falter even when experts verify authenticity.

Synthesis: Resilience is both psychological and structural: protecting analysts from harm, preparing for uncertainty, and acknowledging human biases in decision-making.

⸻

6. The Future of Trust in OSINT

- Peter Greste: Trust is the bedrock; without it, OSINT will suffer journalism’s fate.

- Chris Poulter: AI will reshape workflows, but digital trust and reproducibility will determine credibility.

- Nicholas Eftimiades: Transparency in motivations and indicators helps counter espionage risks.

- Ritu Gill: Verification and accountability sustain professional credibility.

- Matthias Wilson: Trust is built through actionable, real-time intelligence that proves its value in the field.

- Yvonne McDermott Rees: Courts need corroboration and experts—but public trust may crumble if real evidence is mistaken for deepfakes.

- Nicole Matejic: The next generation will inherit safer workplaces, but only if we build cultures of care, safety, and foresight today.

Synthesis: The future of OSINT’s legitimacy depends on trust: trust in evidence, trust in processes, trust in people, and trust in institutions.

⸻

Notable Cross-Cutting Quotes

- “Information is nothing. It only becomes intelligence when it’s tested, contextualised, and made accountable.” — Greste

- “Choose the right tool for the job. One does not fit all.” — Poulter

- “China has a whole-of-society approach to espionage. It’s not just the intelligence services—it’s everyone.” — Eftimiades

- “Accountability separates professionals from hobbyists.” — Gill

- “I love it when I can create something people act upon—when decisions are made because of my work, not just a nice fancy report.” — Wilson

- “The biggest risk with AI is not that deepfake footage will enter the courtroom, but that people will dismiss real evidence as fake.” — McDermott Rees

- “We need to take a human-and-the-machine approach rather than treating them as one and the same thing.” — Matejic

⸻